Artificial intelligence is enabling pervasive computing and becoming an omnipresent feature of everyday life.

From datacenters to smartphones and personal assistants, there is a compelling need to develop bespoke hardware that is energy-efficient and that supports the increasingly multi-faceted functionality of AI systems. The field of “Artificial Intelligence Hardware” is rapidly evolving, from custom neural network accelerators and event-based neuromorphic chips designed to process sensory information at the edge, to powerful machine learning platforms in the cloud.

With this post, we aim to help the community broaden its understanding of what constitutes AI hardware and appreciate the sheer richness of this vast subject.

CPU (Central Processing Unit)

In AI workloads, the processor and motherboard define the platform that supports the GPU, and the GPU dominates performance in most cases.

Preparing data for GPU training also takes significant effort in data analysis and cleanup, and this work is often done on the CPU. The CPU can also serve as the main compute engine when GPU limitations, such as the available onboard memory, require it.

The two recommended CPU platforms, Intel Xeon W and AMD Threadripper Pro, offer excellent reliability, supply the PCI-Express lanes needed for multiple GPUs, and provide excellent memory performance. The Intel platform would be preferable if your workflow can benefit from tools in the Intel oneAPI AI Analytics Toolkit.

Single-socket workstations are recommended, because memory mapping across multi-CPU interconnects can cause problems when mapping memory to GPUs. This class of processors also supports 8 memory channels, which can significantly improve performance for CPU-bound workloads.
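To put the 8-channel figure in perspective: theoretical peak memory bandwidth scales linearly with the number of channels, as channels × transfer rate × 8 bytes per 64-bit transfer. The sketch below assumes DDR4-3200 modules purely for illustration; sustained bandwidth in practice is lower than this peak.

```python
def peak_memory_bandwidth_gbs(channels: int, transfer_rate_mts: int,
                              bytes_per_transfer: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (1 GB = 10**9 bytes).

    channels           -- number of populated memory channels
    transfer_rate_mts  -- module speed in megatransfers/s (3200 for DDR4-3200)
    bytes_per_transfer -- a 64-bit channel moves 8 bytes per transfer
    """
    # MT/s x bytes/transfer = MB/s; divide by 1000 to get GB/s
    return channels * transfer_rate_mts * bytes_per_transfer / 1000


# Assumption: DDR4-3200 modules in every channel.
for channels in (2, 8):
    print(f"{channels} channels: {peak_memory_bandwidth_gbs(channels, 3200):.1f} GB/s")
# prints "2 channels: 51.2 GB/s" and "8 channels: 204.8 GB/s"
```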

At least 4 cores per GPU accelerator are recommended. However, if your workload has a significant CPU compute component, then 32 or even 64 cores could be ideal. In any case, a 16-core processor would generally be considered the minimum for this type of workstation.
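The core-count guidance above can be written as a small sizing rule. This is a minimal sketch: `recommended_cores` is a hypothetical helper, and treating "CPU-heavy" as a simple flag that raises the floor to 32 cores is an assumption, not a formula from the post.

```python
def recommended_cores(num_gpus: int, cpu_heavy: bool = False) -> int:
    """Hypothetical sizing helper based on the rules of thumb in this post."""
    CORES_PER_GPU = 4     # "at least 4 cores for each GPU accelerator"
    MIN_CORES = 16        # 16 cores treated as the workstation minimum
    CPU_HEAVY_CORES = 32  # assumed floor: lower end of the 32-64 core range

    cores = max(MIN_CORES, CORES_PER_GPU * num_gpus)
    if cpu_heavy:
        cores = max(cores, CPU_HEAVY_CORES)
    return cores


for gpus in (1, 2, 4, 8):
    print(f"{gpus} GPU(s): {recommended_cores(gpus)} cores, "
          f"{recommended_cores(gpus, cpu_heavy=True)} if CPU-heavy")
```

The per-GPU rule only starts to matter beyond 4 GPUs; below that, the 16-core minimum dominates.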

GPU (Graphics Processing Unit)

NVIDIA dominates GPU compute acceleration; its GPUs are the most widely supported and the easiest to work with. Other manufacturers and emerging ML acceleration processors show potential, but their current availability and usability preclude us from recommending them.

How much VRAM does AI need?

This depends on the feature space of the model being trained. GPU memory capacity has historically been limited, and ML frameworks have been constrained by the available VRAM.

This is why it’s common to do “data and feature reduction” prior to training. However, the field has developed with great success despite these limitations! 8GB of memory per GPU is considered the minimum and could definitely be a limitation for many applications. 16 to 24GB is fairly common and readily available on high-end video cards.
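A rough way to relate model size to these VRAM tiers is to count the bytes kept per parameter during training. The sketch below assumes fp32 values (4 bytes) and an Adam-style optimizer that stores two extra values per parameter, i.e. weights + gradients + 2 optimizer states = 16 bytes per parameter. It deliberately ignores activations and framework overhead, which often add substantially more, so treat the result as a lower bound.

```python
def training_vram_gb(num_params: int, bytes_per_param: int = 4,
                     optimizer_states: int = 2) -> float:
    """Lower-bound VRAM estimate in GB (10**9 bytes) for training.

    Counts weights + gradients + optimizer states only; activations,
    input batches and framework overhead are NOT included.
    """
    copies = 2 + optimizer_states  # weights + gradients + optimizer states
    return num_params * bytes_per_param * copies / 1e9


CARD_SIZES_GB = (8, 16, 24)  # the VRAM tiers mentioned above

for params in (100_000_000, 350_000_000, 1_000_000_000):
    need = training_vram_gb(params)
    fits = [size for size in CARD_SIZES_GB if need < size]
    print(f"{params / 1e6:,.0f}M params: ~{need:.1f} GB before activations; "
          f"leaves headroom on {fits} GB cards")
```

A different precision or optimizer changes the bytes-per-parameter multiplier, but the linear scaling with parameter count stays the same.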

Multiple GPUs improve performance in AI

Fortunately, multi-GPU support is now common in AI applications. We default to multiple video cards in our recommended configurations, but the benefit this offers may be limited by the development work you are doing. Multi-GPU acceleration must be supported in the framework or program being used.
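One way to see why the benefit of extra cards can be limited is Amdahl's law: if only a fraction p of a job scales across GPUs, the best-case speedup on n GPUs is 1 / ((1 - p) + p / n). The fractions below are illustrative assumptions, not measurements of any particular framework.

```python
def amdahl_speedup(parallel_fraction: float, num_gpus: int) -> float:
    """Upper bound on speedup when only `parallel_fraction` of the work
    scales across `num_gpus` (Amdahl's law)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / num_gpus)


# Illustrative parallel fractions: 50%, 90% and 99% of the work scales
for p in (0.5, 0.9, 0.99):
    speedups = [round(amdahl_speedup(p, n), 2) for n in (1, 2, 4)]
    print(f"p={p}: speedup on 1/2/4 GPUs = {speedups}")
```

With half of the work stuck on a single device, four GPUs give at most a 1.6x speedup, which is why framework support for multi-GPU execution matters so much.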

Memory (RAM)

The first consideration is to have at least twice as much CPU memory as there is total GPU memory in the system. For example, a system with 2x GeForce RTX 3090 GPUs has 48GB of total VRAM, so it needs at least 96GB of system memory; in practice it should be configured with 128GB, the next common configuration size.
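The 2x-VRAM rule from the RTX 3090 example can be sketched as follows. The list of "standard" memory totals (32GB up to 1TB, doubling each step) is an assumption about typical configurations built from identical modules; `recommended_system_ram_gb` is a hypothetical helper.

```python
def recommended_system_ram_gb(vram_per_gpu_gb: int, num_gpus: int,
                              ratio: int = 2,
                              configs_gb=(32, 64, 128, 256, 512, 1024)) -> int:
    """Smallest assumed standard memory configuration that holds at least
    `ratio` x the total VRAM in the system."""
    minimum = ratio * vram_per_gpu_gb * num_gpus
    for size in configs_gb:
        if size >= minimum:
            return size
    raise ValueError(f"needs more than {configs_gb[-1]} GB of system memory")


# 2x GeForce RTX 3090 (24 GB each): 48 GB VRAM -> at least 96 GB -> 128 GB
print(recommended_system_ram_gb(24, 2))  # 128
```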

The second consideration is how much data analysis will be needed. It is often necessary to be able to pull a full dataset into memory for processing and statistical work.

That could mean BIG memory requirements, as much as 1TB of system memory.
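A quick back-of-the-envelope calculation shows how a dataset reaches that scale. The sketch assumes a dense numeric table stored as float64 (8 bytes per value); the row and column counts are hypothetical, and real data-frame libraries add overhead for indexes, strings, and intermediate copies.

```python
def dataset_size_gb(rows: int, columns: int, bytes_per_value: int = 8) -> float:
    """In-memory size in GB (10**9 bytes) of a dense numeric table.
    Default assumes float64 values; excludes library overhead and copies."""
    return rows * columns * bytes_per_value / 1e9


# Hypothetical dataset: 1 billion rows x 100 float64 features
print(f"{dataset_size_gb(1_000_000_000, 100):.0f} GB")  # 800 GB
```

A single working copy of such a table already approaches 1TB, before any transformations create additional copies.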

Storage (Hard Drive)

It’s recommended to use NVMe storage whenever possible, since data streaming speeds can become a bottleneck when data is too large to fit in system memory. Staging job data on NVMe can reduce these slowdowns.
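When data does not fit in memory, every pass over it (for example, every training epoch) has to be streamed from disk, so read throughput sets a floor on job time. The throughput figures below are assumed typical sequential-read speeds (SATA is capped near 600 MB/s by its 6 Gb/s interface; fast PCIe 4.0 NVMe drives advertise around 7000 MB/s); check your drive's datasheet for real numbers.

```python
def read_time_seconds(dataset_gb: float, throughput_mb_s: float) -> float:
    """Time to read `dataset_gb` (10**9 bytes) once at `throughput_mb_s` (10**6 bytes/s)."""
    return dataset_gb * 1000 / throughput_mb_s


# Assumed sequential-read throughputs in MB/s
DRIVES = {"SATA SSD": 550, "PCIe 4.0 NVMe": 7000}

for name, mb_s in DRIVES.items():
    minutes = read_time_seconds(2000, mb_s) / 60
    print(f"{name}: {minutes:.1f} min per pass over a hypothetical 2 TB dataset")
```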

SATA-based SSDs offer larger capacities, which can hold data that exceeds the capacity of typical NVMe drives. SATA SSDs are commonly available in 8TB capacities.

Additionally, all of the above drive types can be configured in RAID arrays. This does add complexity to the system configuration.
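Part of that complexity is choosing a RAID level, since each one trades usable capacity for redundancy differently. The sketch below covers only the common levels 0, 1, 5 and 10 with identical drives; `raid_usable_tb` is a hypothetical helper, and it ignores controller and filesystem overhead.

```python
MIN_DRIVES = {0: 2, 1: 2, 5: 3, 10: 4}


def raid_usable_tb(level: int, num_drives: int, drive_tb: float) -> float:
    """Usable capacity (TB) for common RAID levels built from identical drives."""
    if level not in MIN_DRIVES:
        raise ValueError(f"unsupported RAID level: {level}")
    if num_drives < MIN_DRIVES[level]:
        raise ValueError(f"RAID {level} needs at least {MIN_DRIVES[level]} drives")
    if level == 10 and num_drives % 2:
        raise ValueError("RAID 10 needs an even number of drives")
    if level == 0:
        return num_drives * drive_tb        # striping: full capacity, no redundancy
    if level == 1:
        return drive_tb                     # mirroring: every drive holds the same data
    if level == 5:
        return (num_drives - 1) * drive_tb  # one drive's worth of capacity holds parity
    return num_drives // 2 * drive_tb       # RAID 10: striped across mirrored pairs


for level in (0, 1, 5, 10):
    print(f"RAID {level}: {raid_usable_tb(level, 4, 8.0):.0f} TB usable from 4 x 8 TB")
```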

Artificial Intelligence Hardware Market

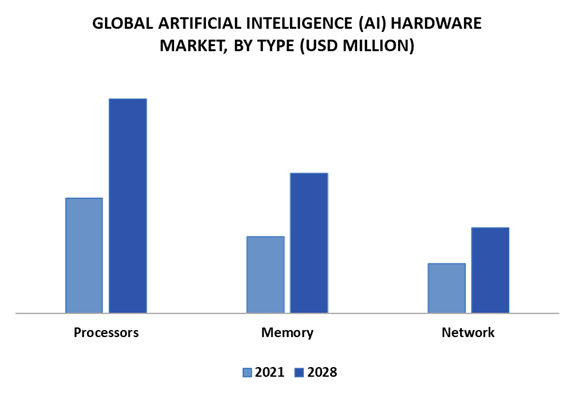

Growth in computing applications, coupled with significant improvements in the commercial aspects of artificial intelligence, is propelling industry growth. Technological advances driven by the rising adoption of AI and robotics in end-use industries such as IT, automotive, healthcare, and manufacturing will drive demand over the forecast period.

Advances in applying semiconductors to intelligent computing, machine learning, and deep learning will be the main factors driving growth in the artificial intelligence hardware market.

However, certain restraints and challenges will hinder overall market growth.

Factors such as a lack of skilled workers and the absence of standards are limiting market growth. In addition, integrating AI solutions into complex existing systems is difficult, which further constrains growth.

Growing interest in machine learning and computer vision is driving companies to invest in developing high-speed processors and high-performance computing.

AI applications have high memory-bandwidth requirements, since computing layers within deep neural networks must pass input data to thousands of cores as quickly as possible.
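The roofline model makes this concrete: attainable throughput is the smaller of peak compute and arithmetic intensity (FLOPs per byte moved) × memory bandwidth. The sketch compares a matrix-vector product, where each weight is read once and used for a single multiply-add, with a matrix-matrix product that reuses data heavily. The accelerator figures (20 TFLOP/s, 1000 GB/s) are hypothetical, and the matmul byte count assumes ideal on-chip reuse, so each matrix crosses the memory bus only once.

```python
def attainable_tflops(flops: float, bytes_moved: float,
                      peak_tflops: float, bandwidth_gb_s: float) -> float:
    """Roofline model: performance is capped by compute or by memory traffic."""
    intensity = flops / bytes_moved                   # FLOPs per byte
    memory_bound = intensity * bandwidth_gb_s / 1000  # FLOP/B x GB/s = GFLOP/s -> TFLOP/s
    return min(peak_tflops, memory_bound)


n = 4096
FP32 = 4                 # bytes per value
PEAK, BW = 20.0, 1000.0  # hypothetical accelerator: 20 TFLOP/s, 1000 GB/s

# Matrix-vector: 2n^2 FLOPs; read the n x n matrix plus input and output vectors
matvec = attainable_tflops(2 * n * n, FP32 * (n * n + 2 * n), PEAK, BW)
# Matrix-matrix: 2n^3 FLOPs; ideally read A and B once and write C once
matmul = attainable_tflops(2 * n ** 3, FP32 * 3 * n * n, PEAK, BW)

print(f"matvec: {matvec:.2f} TFLOP/s, matmul: {matmul:.2f} TFLOP/s")
```

The matrix-vector case reaches only about 0.5 TFLOP/s on this hypothetical chip: it is limited by memory bandwidth, not by the number of cores.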

Memory is required to store input data and model weights, and to perform other functions, during both inference and training. Consider, for example, a model being trained to recognize faces: it has to hold batches of input images in memory alongside its parameters.
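For the face-recognition example, the memory needed for the inputs and the weights can be estimated directly. All figures here are hypothetical: 64 RGB face crops of 224 × 224 pixels stored as float32, and a model with 25 million float32 weights. Training would additionally need gradients, optimizer state, and activations, which are not counted here.

```python
MIB = 1024 ** 2


def batch_bytes(batch_size: int, height: int, width: int,
                channels: int = 3, bytes_per_value: int = 4) -> int:
    """Bytes needed to hold one batch of images (default: RGB, float32)."""
    return batch_size * height * width * channels * bytes_per_value


# Hypothetical setup: 64 RGB face crops of 224 x 224, float32
inputs = batch_bytes(64, 224, 224)
# Hypothetical model with 25 million float32 weights
weights = 25_000_000 * 4

print(f"input batch: {inputs / MIB:.1f} MiB, weights: {weights / MIB:.1f} MiB")
```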

AI will facilitate real-time communication between networks, ensuring that every interaction receives the best possible quality of service from the connections available to it. Moreover, these networks will span all industries.

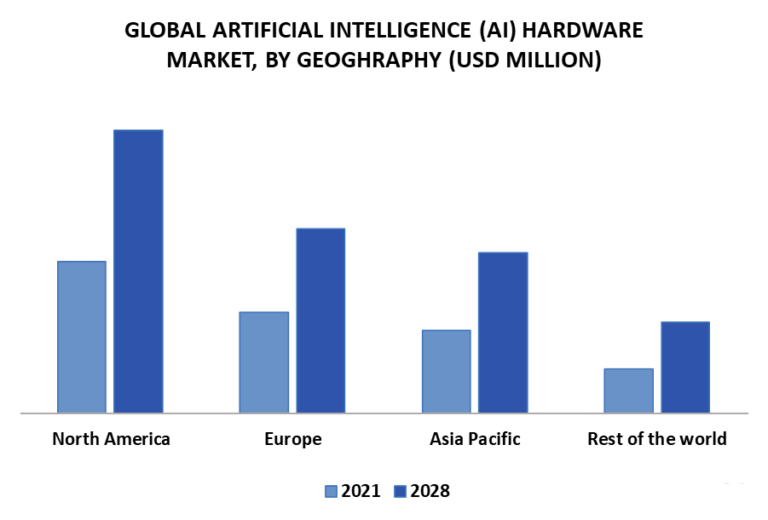

The computer industry has been responding to the emergence of a wider pan-national and indeed international market. The U.S. is considered the largest tech market globally.

In many other countries, the tech sector accounts for a significant portion of economic activity.

Exponential growth in the global sensor market and the increasing adoption of software for applications such as continuous online learning, real-time data streaming, predictive analysis, and data modelling are propelling the growth of the market.

Artificial intelligence technologies are poised to bring transformative change to societies and industries worldwide.

AI and its role as a major driver of innovation, future growth, and competitiveness are internationally recognized. As a result, AI is at the top of national and international policy agendas around the globe.

I hope I’ve given you a good understanding of artificial intelligence hardware requirements.

I am always open to your questions and suggestions.

You can share this post on LinkedIn, Facebook, or Twitter so that someone in need might come across it.
