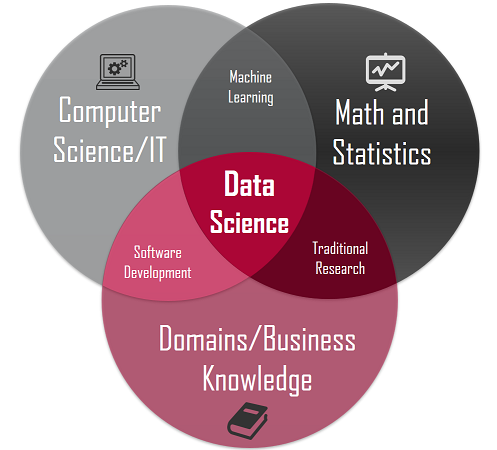

Unsupervised Learning

In unsupervised learning, we are dealing with unlabeled data or data of unknown structure. Using unsupervised learning techniques, we are able to explore the structure of our data to extract meaningful information without the guidance of a known outcome variable or reward function. K-means clustering is an example of an unsupervised learning algorithm.
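To make the idea concrete, here is a minimal sketch of K-means (Lloyd's algorithm) in plain Python. The function name `kmeans`, the toy `points`, and the choice of random initialization from the data are illustrative assumptions, not a reference implementation.

```python
import random

def kmeans(points, k, n_iter=100, seed=0):
    """Cluster 2-D points into k groups with Lloyd's algorithm (illustrative sketch)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # assumption: initialize from k random data points
    for _ in range(n_iter):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            distances = [(p[0] - c[0]) ** 2 + (p[1] - c[1]) ** 2 for c in centroids]
            clusters[distances.index(min(distances))].append(p)
        # Update step: move each centroid to the mean of its cluster.
        new_centroids = []
        for cluster, old in zip(clusters, centroids):
            if cluster:
                new_centroids.append((sum(p[0] for p in cluster) / len(cluster),
                                      sum(p[1] for p in cluster) / len(cluster)))
            else:
                new_centroids.append(old)  # an empty cluster keeps its old centroid
        if new_centroids == centroids:  # assignments are stable, so stop early
            break
        centroids = new_centroids
    return centroids, clusters

# Toy data with no labels: the algorithm only sees coordinates.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
centroids, clusters = kmeans(points, k=2)
```

Note that no outcome variable appears anywhere in the code. The grouping comes entirely from the distances between the points.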

Reinforcement Learning

In reinforcement learning, the goal is to develop a system that improves its performance based on interactions with the environment. Since the information about the current state of the environment typically also includes a so-called reward signal, we can think of reinforcement learning as a field related to supervised learning.

However, in reinforcement learning, this feedback is not the correct ground truth label or value but a measure of how good the action was, as quantified by a reward function. Through interaction with the environment, an agent can then use reinforcement learning to learn a series of actions that maximizes this reward.
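As a rough illustration of learning from a reward signal, the sketch below uses an epsilon-greedy agent on a multi-armed bandit, which is a deliberately simplified setting with no state. The function `run_bandit`, the Gaussian reward model, and the values in `true_means` are all assumptions made for this example.

```python
import random

def run_bandit(true_means, steps=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent on a multi-armed bandit (a simplified RL setting)."""
    rng = random.Random(seed)
    n_arms = len(true_means)
    estimates = [0.0] * n_arms  # agent's running estimate of each action's reward
    counts = [0] * n_arms       # how often each action was tried
    total_reward = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_arms)            # explore: try a random action
        else:
            action = estimates.index(max(estimates))  # exploit: best action so far
        # The environment returns a noisy reward, not a "correct label".
        reward = rng.gauss(true_means[action], 1.0)
        counts[action] += 1
        # Incremental average of the rewards observed for this action.
        estimates[action] += (reward - estimates[action]) / counts[action]
        total_reward += reward
    return estimates, counts, total_reward

estimates, counts, total_reward = run_bandit([0.1, 0.5, 0.9])
```

The agent is never told which action is best. It only observes rewards and gradually shifts toward the actions whose estimated reward is highest.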

Model Parameters and Hyperparameters

In a machine learning model, there are two types of parameters:

- Model Parameters: These are the parameters in the model that must be determined using the training data set. These are the fitted parameters.

- Hyperparameters: These are adjustable parameters that must be tuned to obtain a model with optimal performance.

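The model parameters are learned from the training data, whereas the hyperparameters are chosen by us. It is therefore important to tune the hyperparameters, for example by comparing candidate values on held-out data, so that the fitted model achieves the best possible performance.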
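The distinction can be sketched with a one-parameter ridge regression. In this hypothetical example the slope `w` is the model parameter fitted from the training data, while the penalty strength `alpha` is a hyperparameter chosen by comparing validation error. The data and the candidate values are made up for illustration.

```python
def fit_ridge_slope(xs, ys, alpha):
    """Fit the model parameter w in y ≈ w * x with ridge penalty alpha."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + alpha)

def mse(xs, ys, w):
    """Mean squared error of the model y ≈ w * x."""
    return sum((y - w * x) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Toy data (assumed for illustration), split into training and validation sets.
train_x, train_y = [1.0, 2.0, 3.0, 4.0], [2.1, 3.9, 6.2, 7.8]
valid_x, valid_y = [5.0, 6.0], [10.1, 11.9]

results = {}
for alpha in [0.0, 0.1, 1.0, 10.0]:                # candidate hyperparameter values
    w = fit_ridge_slope(train_x, train_y, alpha)   # model parameter, fitted on training data
    results[alpha] = (w, mse(valid_x, valid_y, w)) # scored on data not used for fitting

# Keep the hyperparameter value with the lowest validation error.
best_alpha = min(results, key=lambda a: results[a][1])
```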

See also Machine Learning Model Optimization

Cross validation

Cross validation is a method of evaluating a machine learning model’s performance across several different splits of the dataset. Because the score no longer depends on a single, possibly unrepresentative split, the estimate is less sensitive to how the data happened to be divided. Cross validation can help us to obtain reliable estimates of the model’s generalization error, that is, how well the model performs on unseen data.

In k-fold cross validation, the dataset is randomly partitioned into k folds of roughly equal size. In each round, one fold is held out as the testing set and the model is trained on the remaining k − 1 folds, then evaluated on the held-out fold. The process is repeated k times so that every fold serves as the testing set exactly once. The training and testing scores are then averaged over the k rounds.
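The procedure can be sketched in a few lines of plain Python. The helper names `k_fold_indices` and `cross_val_mse` are hypothetical, and the "model" here is deliberately trivial (it predicts the training mean) so that the splitting logic stays in focus.

```python
import random

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_indices, test_indices) pairs for k-fold cross validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)       # random partition of the dataset
    folds = [indices[i::k] for i in range(k)]  # k folds of roughly equal size
    for i in range(k):
        test_idx = folds[i]                    # fold i is held out for testing
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, test_idx

def cross_val_mse(ys, k=5):
    """Average test MSE over k folds for a model that predicts the training mean."""
    scores = []
    for train_idx, test_idx in k_fold_indices(len(ys), k):
        prediction = sum(ys[j] for j in train_idx) / len(train_idx)  # "training"
        fold_mse = sum((ys[j] - prediction) ** 2 for j in test_idx) / len(test_idx)
        scores.append(fold_mse)
    return sum(scores) / len(scores)

score = cross_val_mse([2.0, 4.0, 6.0, 8.0, 10.0, 12.0, 14.0, 16.0, 18.0, 20.0], k=5)
```

Every sample appears in exactly one testing fold, so each data point contributes to the evaluation once and to training k − 1 times.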

Bias-Variance Trade-off

In machine learning, the bias-variance trade-off is the property of a set of predictive models whereby models with a lower bias in parameter estimation have a higher variance of the parameter estimates across samples, and vice versa. The bias-variance dilemma is the conflict in trying to simultaneously minimize these two sources of error, both of which prevent supervised learning algorithms from generalizing beyond their training set:

The bias is an error from erroneous assumptions in the learning algorithm. High bias (overly simple) can cause an algorithm to miss the relevant relations between features and target outputs (underfitting).

The variance is an error from sensitivity to small fluctuations in the training set. High variance (overly complex) can cause an algorithm to model the random noise in the training data rather than the intended outputs (overfitting).

It is important to find the right balance between model simplicity and complexity.
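For squared-error loss, this balance can be written down explicitly. Assume (this notation is not from the text above) that the data follow $y = f(x) + \varepsilon$ with $\mathbb{E}[\varepsilon] = 0$ and $\mathrm{Var}(\varepsilon) = \sigma^2$, and that $\hat{f}$ is the model fitted on a random training set. The expected prediction error at a point $x$ then decomposes as:

```latex
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
```

Overly simple models tend to have a large first term, overly complex models a large second term, and no model can remove the third.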

Evaluation Metrics

In machine learning, there are several metrics that can be used for model evaluation. For example, a supervised learning (continuous target) model, also referred to as a regression model, can be evaluated using metrics such as the R² score, mean square error (MSE), or mean absolute error (MAE).

Furthermore, a supervised learning (discrete target) model, also referred to as a classification model, can be evaluated using metrics such as accuracy, precision, recall, F1 score, and the area under the ROC curve (AUC).
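Most of these metrics are simple to compute by hand. The sketch below implements them in plain Python under the usual textbook definitions. The function names and the toy inputs are assumptions for illustration, and AUC is left out because it requires predicted scores rather than hard labels.

```python
def regression_metrics(y_true, y_pred):
    """Return MSE, MAE, and R² for a continuous target."""
    n = len(y_true)
    mean_true = sum(y_true) / n
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)          # total sum of squares
    return {
        "mse": ss_res / n,
        "mae": sum(abs(t - p) for t, p in zip(y_true, y_pred)) / n,
        "r2": 1.0 - ss_res / ss_tot,
    }

def classification_metrics(y_true, y_pred):
    """Return accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0  # guard against division by zero
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

reg = regression_metrics([3.0, 5.0, 7.0, 9.0], [2.5, 5.5, 7.0, 8.0])
clf = classification_metrics([1, 1, 1, 0, 0, 0, 1, 0], [1, 0, 1, 0, 1, 0, 1, 0])
```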

Uncertainty Quantification

It is important to build machine learning models that will yield unbiased estimates of uncertainties in calculated outcomes. Due to the inherent randomness in the dataset and model, evaluation metrics such as the R² score are random variables, and thus it is important to estimate the degree of uncertainty in the model.
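One common way to put a number on this uncertainty is the bootstrap, which is an assumption on our part since the text does not prescribe a method. The sketch below resamples an evaluation set with replacement and reports the mean and standard deviation of the resulting R² scores. It keeps the predictions fixed, so it captures only the sampling variability of the metric, not the variability from refitting the model. The helper names and the toy data are hypothetical.

```python
import random
import statistics

def r2_score(y_true, y_pred):
    """Coefficient of determination, R² = 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

def bootstrap_r2(y_true, y_pred, n_boot=1000, seed=0):
    """Mean and standard deviation of R² over bootstrap resamples."""
    rng = random.Random(seed)
    n = len(y_true)
    scores = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # sample n indices with replacement
        resampled_true = [y_true[i] for i in idx]
        resampled_pred = [y_pred[i] for i in idx]
        if len(set(resampled_true)) < 2:
            continue  # R² is undefined when all resampled targets are equal
        scores.append(r2_score(resampled_true, resampled_pred))
    return statistics.mean(scores), statistics.stdev(scores)

# Toy targets and predictions (assumed for illustration).
y_true = [3.1, 4.9, 7.2, 8.8, 11.1, 13.0, 15.2, 16.9, 19.1, 21.0]
y_pred = [3.0, 5.0, 7.0, 9.0, 11.0, 13.0, 15.0, 17.0, 19.0, 21.0]
mean_r2, std_r2 = bootstrap_r2(y_true, y_pred)
```

Reporting the score as mean ± standard deviation, rather than as a single number, makes the uncertainty visible.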

Math Concepts

Basic Calculus: Most machine learning models are built with a dataset having several features or predictors. Hence, familiarity with multivariable calculus is extremely important for building a machine learning model. Here are the topics you need to be familiar with:

Functions of several variables; Derivatives and gradients; Step function, Sigmoid function, Logit function, ReLU (Rectified Linear Unit) function; Cost function; Plotting of functions; Minimum and Maximum values of a function.
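For reference, the four named functions can each be written in a line or two of Python. These are the standard textbook definitions. The convention that the step function returns 1 at exactly zero is an assumption, since texts differ on that point.

```python
import math

def step(x):
    """Heaviside step function (assumed convention: step(0) = 1)."""
    return 1.0 if x >= 0 else 0.0

def sigmoid(x):
    """Logistic sigmoid: maps any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def logit(p):
    """Log-odds of a probability p in (0, 1); the inverse of the sigmoid."""
    return math.log(p / (1.0 - p))

def relu(x):
    """Rectified Linear Unit: zero for negative inputs, identity otherwise."""
    return max(0.0, x)

values = [f(0.5) for f in (step, sigmoid, logit, relu)]
```

Since the logit is the inverse of the sigmoid, `logit(sigmoid(x))` recovers `x` up to floating-point error.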

Basic Linear Algebra: Linear algebra is the most important math skill in machine learning. A data set is represented as a matrix. Linear algebra is used in data preprocessing, data transformation, dimensionality reduction, and model evaluation. Here are the topics you need to be familiar with: Vectors; Norm of a vector; Matrices; Transpose of a matrix; The inverse of a matrix; The determinant of a matrix; Trace of a Matrix; Dot product; Eigenvalues; Eigenvectors.

Optimization Methods: Most machine learning algorithms perform predictive modeling by minimizing an objective function, thereby learning the weights that must be applied to the testing data in order to obtain the predicted labels. Here are the topics you need to be familiar with: Cost function/Objective function; Likelihood function; Error function; Gradient Descent Algorithm and its variants (e.g., Stochastic Gradient Descent Algorithm).
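As a minimal sketch of how an objective function is minimized, the code below fits a straight line y ≈ w·x + b by batch gradient descent on the mean squared error. The learning rate, step count, and toy data are assumptions chosen so that the example converges. They are not recommended defaults.

```python
def gradient_descent(xs, ys, lr=0.05, n_steps=2000):
    """Fit y ≈ w * x + b by batch gradient descent on the mean squared error."""
    w, b = 0.0, 0.0  # initial weights
    n = len(xs)
    for _ in range(n_steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step against the gradient, scaled by the learning rate.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Toy data generated from y = 2x + 1 (no noise), assumed for illustration.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = gradient_descent(xs, ys)
```

Stochastic gradient descent follows the same update rule but estimates the gradient from one sample, or a small batch, per step instead of the full dataset.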

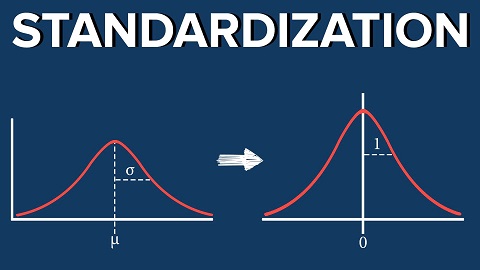

Statistics and Probability Concepts

Statistics and Probability are used for visualization of features, data preprocessing, feature transformation, data imputation, dimensionality reduction, feature engineering, model evaluation, etc. Here are the topics you need to be familiar with:

Mean, Median, Mode, Standard deviation/variance, Correlation coefficient and the covariance matrix, Probability distributions (Binomial, Poisson, Normal), p-value, Bayes' Theorem (Precision, Recall, Positive Predictive Value, Negative Predictive Value, Confusion Matrix, ROC Curve), Central Limit Theorem, R² score, Mean Square Error (MSE), A/B Testing, Monte Carlo Simulation.
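To show how Bayes' Theorem connects to a quantity such as the positive predictive value, here is a small worked sketch. The function name and the numbers (a test with 99% sensitivity and 95% specificity for a condition with 1% prevalence) are hypothetical.

```python
def positive_predictive_value(sensitivity, specificity, prevalence):
    """P(condition | positive test), computed with Bayes' Theorem."""
    true_positive = sensitivity * prevalence                   # P(positive and condition)
    false_positive = (1.0 - specificity) * (1.0 - prevalence)  # P(positive and no condition)
    return true_positive / (true_positive + false_positive)

# Hypothetical screening test: accurate, but the condition is rare.
ppv = positive_predictive_value(sensitivity=0.99, specificity=0.95, prevalence=0.01)
```

With these inputs the positive predictive value is only about 17%, because false positives from the large healthy group outnumber true positives from the small affected group.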

Productivity Tools

A typical data analysis project may involve several parts, each including several data files and different scripts with code. Keeping all these organized can be challenging. Productivity tools help you to keep projects organized and to maintain a record of your completed projects. Essential productivity tools for practicing data scientists include Unix/Linux, git and GitHub, RStudio, and Jupyter Notebook.
