In the context of machine learning, data normalization is an essential process for ensuring a more accurate prediction. It helps organizations make the best use of their datasets by consolidating and querying data from multiple sources. The main benefits of this process are cost savings, space savings, and accuracy improvements.

What is Data Normalization?

Normalization is a scaling technique in Machine Learning applied during data preparation to change the values of numeric columns in the dataset to use a common scale and it is required only when features of machine learning models have different ranges.

Data normalization is mainly used to reduce redundant data, thereby assisting in reducing the size of data for expediting the processing of information. In most cases, Data Normalization Techniques in Machine Learning are implemented in classification models.

- Data Normalization is the process of organizing data such that it seems consistent across all records and fields.

- It improves the cohesion of entry types, resulting in better data cleansing, lead creation, and segmentation.

Why do we need Data Normalization?

When dealing with huge data sets, normalization is usually essential to ensure you do not take data consistency and quality for granted. Since you cannot look for issues and resolve every data record in big data, it is critical to use the Normalization Techniques to transform data and ensure consistency.

- The Normalization Techniques in Machine Learning are becoming more effective and efficient.

- The data is translated into a format that everyone can understand; the data can be pulled from databases more quickly, and the data can be analyzed in a specified way.

Normalization Techniques in Machine Learning

Z-Score Normalization

The Z-Score value is one of the Normalization Techniques in Machine Learning that determines how much a data point deviates from the mean. It calculates the standard deviations that are below or above the mean. It might be anywhere between -3 and +3 standard deviations. Z-score normalization techniques is beneficial for data analysis that requires comparing a value to a mean (average) value, such as test or survey findings.

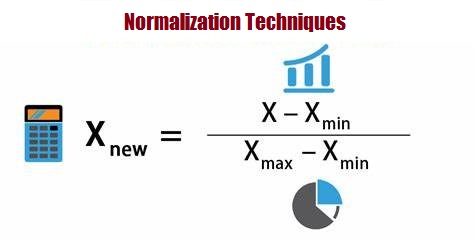

Min-Max Normalization

Linear normalization is arguably the easier and most flexible normalization technique. In laymen’s terms, it consists of establishing a new “base” of reference for each data point. Often called “max-min” normalization, this technique allows analysts to take the difference of the maximum x value and minimum x value in the set, and establish a base.

This is a good starting strategy, and in reality, analysts can normalize data points to any base once they have completed linear normalization.

Formula: (v – min A) / (max A – min A) * (new_max A – new_min A) + new_min A

Decimal Scaling Normalization

Decimal scaling is another way of normalizing. It works by rounding an integer to the nearest decimal point. It normalizes data by shifting the decimal point of the numbers. We divide each data value by the largest absolute value of the data to normalize the data using this approach. The data value, vi, is normalized to vi’ using the formula below.

Formula: v’ = v / 10^j

Where:

- v’ is the new value after decimal scaling is applied.

- The attribute’s value is represented by V.

- The decimal point movement is now defined by integer J.

Clipping Normalization

Clipping is not exactly a normalization technique, but it’s a tool analysts use before or after using normalization techniques. In short, clipping consists of establishing maximum and minimum values for the dataset and requalifies outliers to this new max or mins.

Imagine you have a dataset consisting of number [14, 12, 19, 11, 15, 17, 18, 95]. As you can see, the number 95 is a big outlier. We can clip it out of the data by reassigning a new high. Since your range without 95 is 11–19, you could reassign it a value of 19.

Note that clipping does NOT remove points from a data set, it reassigns data in a dataset. A quick check to make sure you’ve done it right is to make sure the data population N is the same before and after clipping, but that no outliers exist.

Normalize or Standardize ?

Normalization is good to use when you know that the distribution of your data does not follow a Gaussian distribution. This can be useful in algorithms that do not assume any distribution of the data, like K-Nearest Neighbors.

On the other hand, Standardization can be helpful in cases where the data follows a Gaussian distribution. Unlike normalization, standardization does not have a bounding range. So, even if you have outliers in your data, they will not be affected by standardization.

However, the choice of using normalization or standardization will depend on your problem and the machine learning algorithm you are using. There is no fast rule to tell you when to normalize or standardize your data. You can always start by fitting your model to raw, normalized, and standardized data and comparing the performance for the best results.

It is recommended to fit the scaler on the training data and then use it to transform the testing data. This would avoid any data leakage during the model testing process.

Related posts you might like:

MOST COMMENTED

Tutorial

Important Methods in Matplotlib

Machine Learning

Bias and Variance Tradeoff Machine Learning

Tutorial

Multiclass and Multilabel Classification

Machine Learning

Reinforcement Learning in Machine Learning

Deep Learning

Alexnet Architecture Code

Machine Learning

Machine Learning Models Explained