When creating a machine learning project, it is not always a case that we come across the clean and formatted data. So, we use data preprocessing task.

A good data preprocessing in machine learning is the most important factor that can make a difference between a good and a poor machine learning model. In this post we will understand the need of data preprocessing and then present a nutshell view of various steps that are involved in this process.

What is Data Preprocessing ?

It is the process of transforming raw data into a useful, understandable format. Real-world or raw data usually has inconsistent formatting, human errors, and can also be incomplete. It resolves such issues and makes datasets more complete and efficient to perform data analysis.

It’s a crucial process that can affect the success of data mining and machine learning projects. It makes knowledge discovery from datasets faster and can ultimately affect the performance of machine learning models.

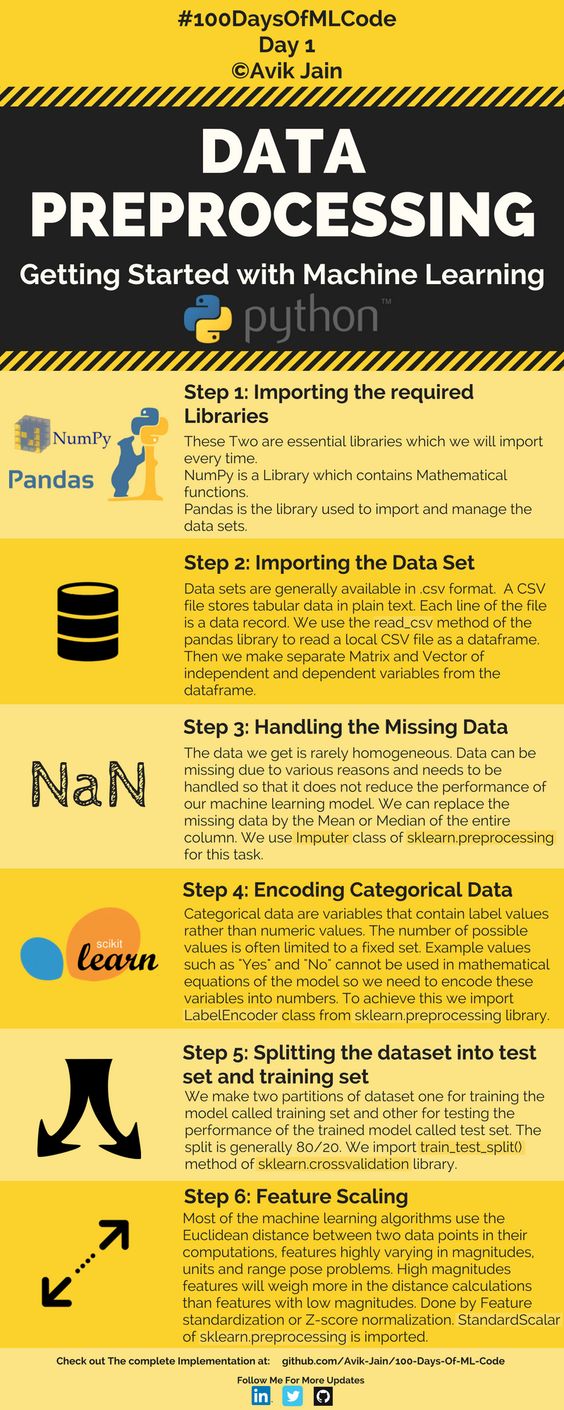

Data Preprocessing Steps

Data Preprocessing in Machine Learning can be divide into three main parts. There are various steps in each of these 3 parts. Depending on the data and machine learning algorithm, not all steps might be required though.

Data Integration:

In real situation, you might have to extract data from various sources and have to integrate it. Not all sources might be giving you data in same format. For e.g. one source might be giving you data in csv and other in XML. So during data integration from various sources, you will have to bring entire data in a common format.

Data Cleaning:

The attributes may have incorrect data types and are not in sync with the data dictionary. Replace the special characters for example:

- Replace $ and comma signs in the column of Sales/Income/Profit.

- Making the format of the date column consistent with the format of the tool used for data analysis.

- Check for null or missing values, also check for the negative values. The relevancy of the negative values depends on the data.

- Smoothing of the noise present in the data by identifying and treating for outliers.

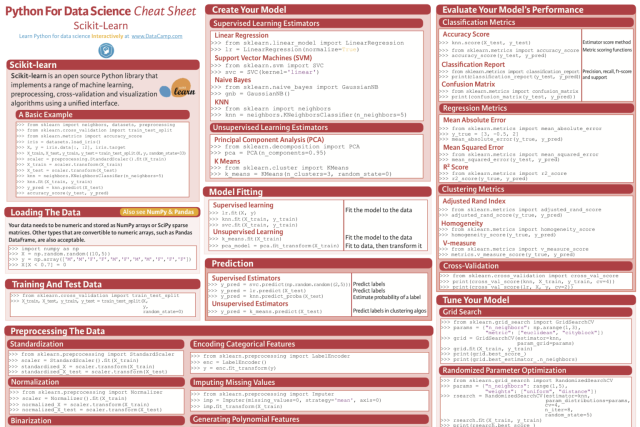

Data Transformation

The final step is to transform the data. The specific algorithm you are working with and the knowledge of the problem will influence this step and you will very likely have to revisit different transformations of your preprocessed data as you work on your problem.

Three common data transformations are scaling, attribute decompositions and attribute aggregations.

Why is Preprocessing required?

As you know, a database is a collection of data points. Data points are also called observations, data samples, events, and records.

Each sample is described using different characteristics, also known as features or attributes. Preprocessing is essential to effectively build models with these features.

Numerous problems can arise while collecting data. You may have to aggregate data from different data sources, leading to mismatching data formats, such as integer and float.

If you’re aggregating data from two or more independent datasets, the gender field may have two different values for men: man and male. Likewise, if you’re aggregating data from ten different datasets, a field that’s present in eight of them may be missing in the rest two.

By preprocessing data, we make it easier to interpret and use. This process eliminates inconsistencies or duplicates in data, which can otherwise negatively affect a model’s accuracy. Preprocessing also ensures that there aren’t any incorrect or missing values due to human error or bugs. In short, employing data preprocessing techniques makes the database more complete and accurate.

Characteristics of quality data

For machine learning algorithms, nothing is more important than quality training data. Their performance or accuracy depends on how relevant, representative, and comprehensive the data is. let’s look at some factors contributing to data quality.

- Accuracy: As the name suggests, accuracy means that the information is correct. Outdated information, typos, and redundancies can affect a dataset’s accuracy.

- Consistency: The data should have no contradictions. Inconsistent data may give you different answers to the same question.

- Completeness: The dataset shouldn’t have incomplete fields or lack empty fields. This characteristic allows data scientists to perform accurate analyses as they have access to a complete picture of the situation the data describes.

- Validity: A dataset is considered valid if the data samples appear in the correct format, are within a specified range, and are of the right type. Invalid datasets are hard to organize and analyze.

- Timeliness: Data should be collected as soon as the event it represents occurs. As time passes, every dataset becomes less accurate and useful as it doesn’t represent the current reality. Therefore, the topicality and relevance of data is a critical data quality characteristic.

In summary

In this post you learned the essence of data preprocessing for machine learning. You discovered a three step for preprocessing:

Preprocessing is a large subject that can involve a lot of iterations, exploration and analysis. Getting good at data preprocessing will make you a master at machine learning.

Recommended for you:

Preprocessing

MOST COMMENTED

Tutorial

Important Methods in Matplotlib

Machine Learning

Bias and Variance Tradeoff Machine Learning

Tutorial

Multiclass and Multilabel Classification

Machine Learning

Reinforcement Learning in Machine Learning

Deep Learning

Alexnet Architecture Code

Machine Learning

Machine Learning Models Explained