Business users rely on data to make just about every business decision. Hence, it is important to make raw data usable for analytics. Therefore, it’s vital for organizations to employ individuals who understand what clean data looks like and how to shape raw data into usable forms. This is where data wrangling comes into play.

What is Data Wrangling ?

It is the act of extracting data and converting it to a workable format. It is the process of removing errors and combining complex data sets to make them more accessible and easier to analyze.

Data wrangling process, also known as a data munging process, consists of reorganizing, transforming and mapping data from raw form into usable and valuable for a variety of downstream uses including analytics.

It enables businesses to tackle more complex data in less time, produce more accurate results, and make better decisions. The exact methods vary from project to project depending upon your data and the goal you are trying to achieve.

Importance

Some may question if the amount of work and time devoted to wrangling of data is worth the effort. The importance of it can be described as:

- Getting all data from various sources into a centralized location so it can be make raw data usable. Accurately wrangled data guarantees that quality data is entered into the downstream analysis.

- Piecing together raw data according to the required format and understanding the business context of data. Businesses use this standardized data to perform crucial, cross dataset analytics.

- Cleansing the data from the noise or flawed, and missing elements.

- It acts as a preparation stage for the data mining process, which involves gathering data and making sense of it.

- Helping business users make concrete, timely decisions.

- Insights gained during the data wrangling process can be invaluable. They will affect the future course of a project. Skipping this step will result in poor data models that impact an organization’s decision making.

Unfortunately, high-level decision makers who prefer quick results may be surprised by how long it takes to get data into a usable format. And as businesses face budget and time pressures, this makes a data wrangler’s job more difficult.

The job involves careful management of expectations, as well as technical know-how.

Data wrangling is the process of converting and mapping raw data and getting it ready for analysis.

Examples

The most commonly examples are for:

- Merging multiple data sources into a single dataset.

- Identifying outliers in data and removing them to allow for proper analysis.

- Cleaning up inconsistent values and tags.

- Identifying gaps in data, and removing or filling them.

- Deleting irrelevant or unnecessary data.

- Detect corporate fraud.

- Support data security.

- Ensure accurate and recurring data modeling results.

- Ensure business compliance with industry standards.

- Customer Behavior Analysis.

- Reduce time spent on preparing data for analysis.

- Recognize the business value of your data.

Data wrangling vs. Data Cleaning

Some use the terms data cleaning and wrangling interchangeably. It’s because they share some common attributes and tools. But there are some important differences between them:

Data wrangling refers to the process of collecting raw data, cleaning it, mapping it, and storing it in a useful format. The term is often used to describe each of these steps individually, as well as in combination.

Data cleaning, meanwhile, is a single aspect of the data wrangling process. Data cleaning involves sanitizing a data set by removing unwanted observations, outliers, fixing structural errors and typos, standardizing units of measure, validating, and so on. Data cleaning tends to follow more precise steps.

The distinction between data cleaning and wrangling is not always clear. However, you can generally think of data wrangling as an umbrella task. Data cleaning falls under this umbrella, alongside a range of other activities. These can involve planning which data you want to collect, carrying out exploratory analysis, cleansing and mapping the data, creating data structures, and storing the data for future use.

Data Wrangling Tools

There are different tools that can be used for gathering, importing, structuring, and cleaning data.

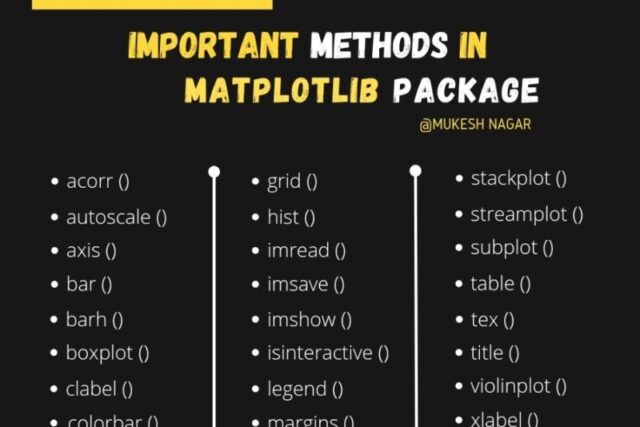

It is most often accomplished with Python through the use of packages like NumPy, Pandas, Matplotlib, Plotly and Theano. Basic software is Excel Power Query, which facilitates manual wrangling. You can use automated tools for data wrangling, where the software allows you to validate data mappings and scrutinize data samples at every step of the transformation process.

Top Data Wrangling Skills

It is one of the essential skills a data scientist must have. Because, you will rarely get flawless data in real scenarios.

As a data scientist, you need to know your data intimately and look out to enrich the data. Hence it becomes imperative to have a good know-how of the business context of the data, so you can easily interpret, cleanse and transform it into ingestible form.

- To be able to perform series of data transformations like merging, ordering, aggregating.

- To use programming languages like R, Python, Julia, SQL.

- To make logical judgments based on underlying business context.

Steps

Each data project requires a unique approach to ensure the final dataset is reliable and accessible. Hence, the tasks required in data wrangling depend on what transformations you need to carry out.

These are commonly referred to as data wrangling steps. But the process is iterative. Some of the steps may not be necessary, others may need repeating, and they will rarely occur in the same order. But you need to know what they are.

- Discovery:

It refers to the process of familiarizing yourself with data so you can conceptualize how you might use it. During process of discovery, you may identify patterns in the data, along with obvious issues, such as missing or incomplete values that need to be addressed. This is an important step, as it will inform every activity that comes afterward. - Structuring:

Data structuring is the process of taking raw data and transforming it to be more readily leveraged. Freshly collected data are usually in an unstructured format. They lack an existing model and are completely disorganized. For instance, you might parse HTML code scraped from a website, pulling out what you need and discarding the rest. - Cleaning:

Data cleaning is the process of removing inherent errors in data that might distort your analysis or render it less valuable. You can start applying algorithms to tidy it up. You can automate a range of algorithmic tasks using tools like Python and R. They can be used to identify outliers, delete duplicate values, standardize systems of measurement, and so on. - Enriching:

Once you understand your existing data, you must determine whether you have all of the data necessary for the project at hand. Data enrichment involves combining your dataset with data from other sources. Your goal could be to accumulate a greater number of data points to improve the accuracy of an analysis.

If you decide that enrichment is necessary, you need to repeat the steps above for any new dataset. - Validating:

Data validation refers to the process of verifying that your data is both consistent and of a high quality. We can do this using preprogrammed scripts that check the data’s attributes against defined rules. This is also a good example of an overlap between data cleaning and wrangling. Because you’ll probably find errors, you may need to repeat this step several times. - Publishing:

Once your data has been validated, you can publish it. This means making the data accessible by depositing them into a new database or architecture. End users might include data scientists or engineers. The format you use to share the information, such as a written report or electronic file, will depend on your data and goals.

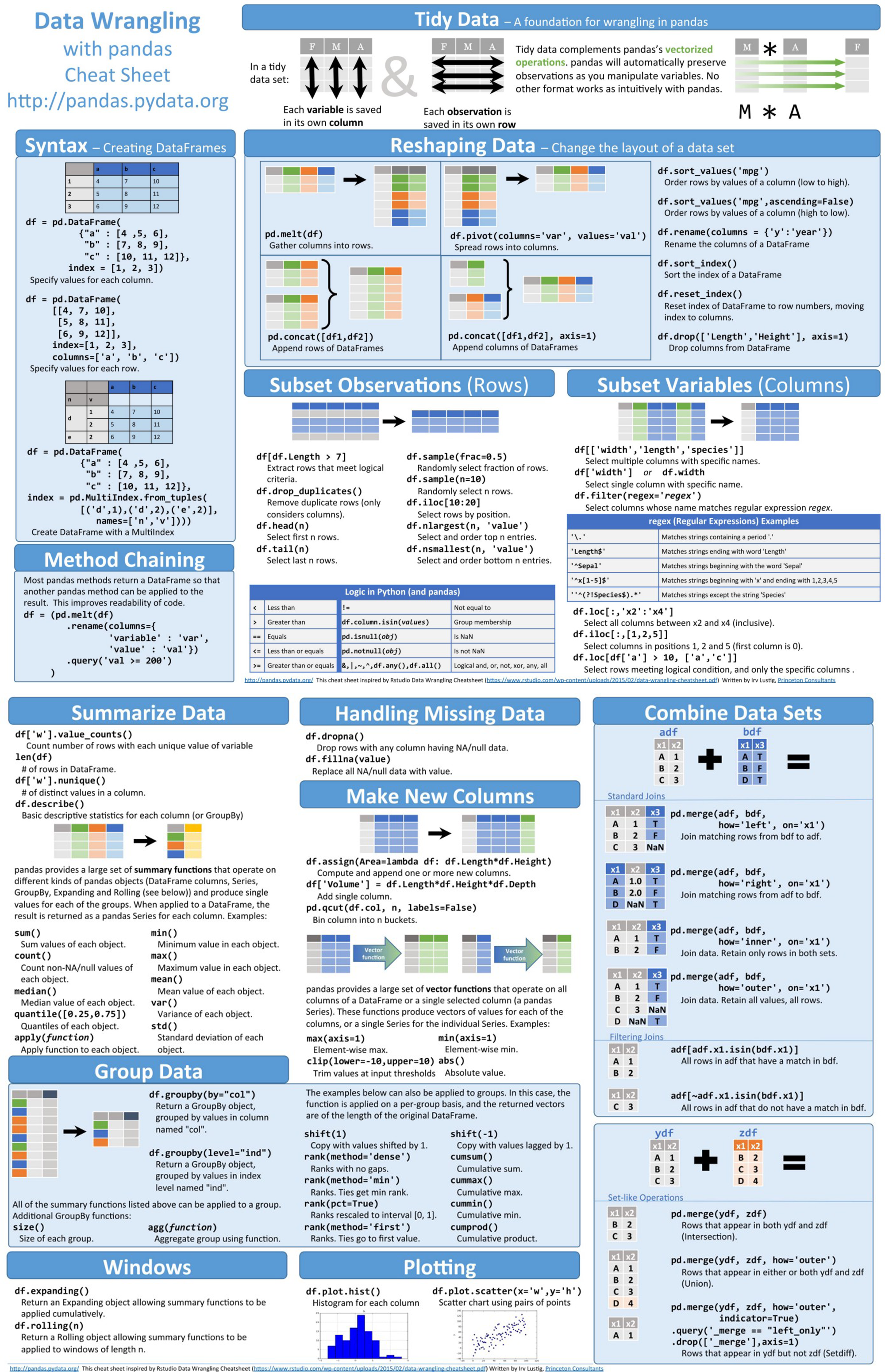

Data Wrangling Infographic with Pandas

Recommended for you:

Data Cleaning in Machine Learning

Data PreProcessing

MOST COMMENTED

Tutorial

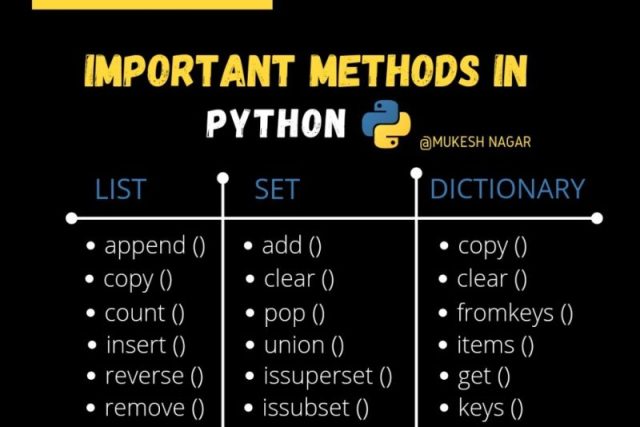

Important Methods in Matplotlib

Machine Learning

Bias and Variance Tradeoff Machine Learning

Tutorial

Multiclass and Multilabel Classification

Machine Learning

Reinforcement Learning in Machine Learning

Deep Learning

Alexnet Architecture Code

Machine Learning

Machine Learning Models Explained