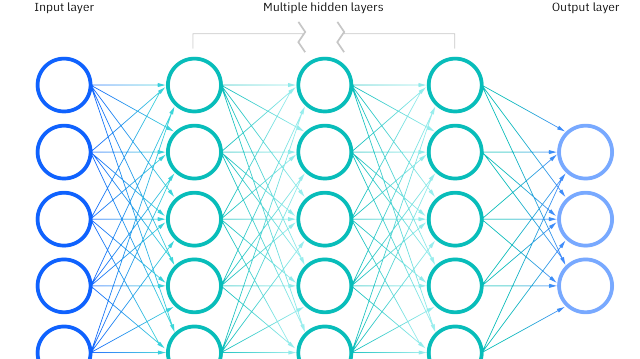

Neural networks, also known as artificial neural networks (ANNs), are a subset of machine learning and are at the heart of deep learning algorithms. Their name and structure are inspired by the human brain, mimicking the way that biological neurons signal to one another.

Neural networks consist of layers of nodes: an input layer, one or more hidden layers, and an output layer. Each node, or artificial neuron, connects to nodes in the next layer and has an associated weight and threshold. If the output of a node is above its threshold value, that node is activated and sends data to the next layer of the network; otherwise, it passes nothing along. Neural networks rely on training data to learn and improve their accuracy over time. Once trained, they are powerful tools in computer science and artificial intelligence, allowing us to classify and cluster data at high speed.
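
The threshold behavior described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the function names, weights, and thresholds are made up by hand, and an activated node is assumed to pass its weighted sum forward. Trained networks learn their weights from data and usually use smoother activation functions.

```python
def node_output(inputs, weights, threshold):
    """Weighted sum of the inputs; the node fires only above its threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return total if total > threshold else 0.0


def layer_forward(inputs, layer):
    """Apply every (weights, threshold) node in a layer to the same inputs."""
    return [node_output(inputs, w, t) for w, t in layer]


# Hypothetical weights and thresholds, chosen by hand for illustration.
hidden_layer = [
    ([0.5, 0.5], 0.4),   # fires when the weighted sum exceeds 0.4
    ([1.0, -1.0], 0.0),  # fires when the first input outweighs the second
]
output_layer = [([1.0, 1.0], 0.5)]

hidden = layer_forward([1.0, 0.0], hidden_layer)   # input layer -> hidden layer
prediction = layer_forward(hidden, output_layer)   # hidden layer -> output layer
print(hidden, prediction)
```

With the input `[1.0, 0.0]` both hidden nodes fire. Swapping the input to `[0.0, 1.0]` leaves the second hidden node below its threshold, so it sends nothing on.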

Convolutional Neural Networks

Convolutional neural networks (CNNs) are a class of neural networks that use convolutional layers to extract features from the input. CNNs are frequently used in computer vision because they process visual data with far fewer parameters than a fully connected network of similar size: each filter's weights are shared across every position in the image, which keeps them efficient to run. The basic idea is that each convolutional layer slides small learned filters over its input to produce feature maps, and pooling layers then shrink the spatial dimensions of those maps while keeping the strongest responses. Early layers tend to respond to simple features such as edges and color contrasts, and deeper layers combine them into higher-level features.
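
To make the feature-extraction step concrete, here is a minimal sketch of one convolution followed by max pooling, written in plain Python for a single-channel image. The helper names `conv2d` and `max_pool2d`, the toy image, and the hand-picked edge filter are all assumptions for illustration; a real CNN learns its filters during training and would use an optimized library.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation of a single-channel image."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that don't fit are dropped."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    return [
        [
            max(feature_map[i * size + a][j * size + b]
                for a in range(size) for b in range(size))
            for j in range(cols)
        ]
        for i in range(rows)
    ]


# 5x5 image with a vertical edge: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked 2x2 vertical-edge filter (a trained CNN would learn its own).
kernel = [[-1, 1], [-1, 1]]

features = conv2d(image, kernel)   # 4x4 feature map
pooled = max_pool2d(features)      # 2x2 map after pooling
print(features)
print(pooled)
```

The feature map is non-zero only where the filter straddles the edge, and pooling halves each spatial dimension while keeping that response.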

CNNs are widely used to classify satellite images, process medical images, forecast time series, and detect anomalies.

Long Short-Term Memory

Long short-term memory (LSTM) networks are a type of recurrent neural network (RNN) that can learn and memorize long-term dependencies. Retaining past information for long periods is their default behavior.

LSTMs retain information over time, which makes them useful in time-series prediction because they remember previous inputs. An LSTM has a chain-like structure in which each repeating unit contains four interacting layers: a forget gate, an input gate, a candidate layer, and an output gate. Besides time-series prediction, LSTM networks are good at detecting patterns and work well in NLP tasks, image recognition, and classification.
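
The four interacting layers can be written out as a single time step. The sketch below assumes the standard LSTM formulation (forget gate, input gate, candidate values, output gate) and, to stay short, uses scalar input and state. The function `lstm_step`, the `params` layout, and the parameter values are hypothetical and untrained.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step for scalar input, hidden state, and cell state.

    params maps each of the four layers ("f", "i", "g", "o") to a
    (w_x, w_h, bias) tuple.
    """
    def pre(name):
        w_x, w_h, b = params[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("f"))      # forget gate: how much old cell state to keep
    i = sigmoid(pre("i"))      # input gate: how much new information to write
    g = math.tanh(pre("g"))    # candidate values that could be written
    o = sigmoid(pre("o"))      # output gate: how much cell state to expose
    c = f * c_prev + i * g     # updated cell state (the long-term memory)
    h = o * math.tanh(c)       # updated hidden state (this step's output)
    return h, c


# Hypothetical, untrained parameters chosen only so the example runs.
params = {
    "f": (0.5, 0.1, 1.0),
    "i": (0.6, 0.2, 0.0),
    "g": (0.9, 0.3, 0.0),
    "o": (0.4, 0.1, 0.0),
}

h, c = 0.0, 0.0
for x in [1.0, 0.5, -0.3, 0.8]:
    h, c = lstm_step(x, h, c, params)
print(h, c)
```

When the forget gate is close to 1 and the input gate is close to 0, the cell state is carried forward almost unchanged, which is how the network holds on to information over many steps.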

Recurrent Neural Network

A recurrent neural network (RNN) is an artificial neural network that processes data sequentially. RNNs can handle sequences of arbitrary length and are well suited to predicting sequential patterns. RNNs have connections that form directed cycles, which allow the output from the previous step to be fed back as input to the current step.

RNNs are commonly used for image captioning, time-series analysis, natural-language processing, handwriting recognition, and machine translation. Their main drawback is that they cannot process the steps of an input sequence in parallel: the hidden state at each time step depends on the hidden state from the previous step, so the steps must be computed one after another. Training on long sequences can also require a lot of memory, because the hidden state at every step has to be kept for backpropagation, so in practice sequences are often truncated or padded to a fixed length.
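
The sequential dependency is easiest to see in code. The sketch below assumes a simple scalar RNN with a tanh activation. The function name `rnn_forward` and the weights are hypothetical and untrained.

```python
import math


def rnn_forward(inputs, w_x, w_h, bias, h0=0.0):
    """Run a scalar RNN over a sequence and return every hidden state."""
    h = h0
    states = []
    for x in inputs:  # must run in order: each h depends on the previous h
        h = math.tanh(w_x * x + w_h * h + bias)
        states.append(h)
    return states


# Hypothetical, untrained weights for illustration.
sequence = [1.0, 0.0, 0.0]
states = rnn_forward(sequence, w_x=1.0, w_h=0.5, bias=0.0)
print(states)
```

Only the first input is non-zero, yet the later hidden states are still non-zero because each one is computed from the one before it. The same dependency is why the loop cannot simply be run for all time steps at once.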

Generative Adversarial Networks

Generative adversarial networks (GANs) are neural networks with two components: a generator, which learns to generate fake data, and a discriminator, which learns to tell that fake data apart from real data.
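
The two components are trained against each other, and that opposition shows up in their loss functions. The sketch below assumes the common binary cross-entropy formulation with the non-saturating generator loss; other GAN variants use different losses. The function names and the discriminator scores are made up for illustration, and no actual training happens here.

```python
import math


def bce(prediction, target):
    """Binary cross-entropy for one probability and a 0/1 target."""
    eps = 1e-12  # guards against log(0)
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1 - target) * math.log(1.0 - p))


def discriminator_loss(d_real, d_fake):
    """The discriminator wants real samples scored 1 and fakes scored 0."""
    real_term = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """The generator wants the discriminator to score its samples as real."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)


# Hypothetical discriminator outputs (probability that a sample is real).
d_real = [0.9, 0.8]   # scores on real data
d_fake = [0.2, 0.1]   # scores on generated data

d_loss = discriminator_loss(d_real, d_fake)
g_loss = generator_loss(d_fake)
print(d_loss, g_loss)
```

With these scores the discriminator is winning, so its loss is low and the generator's is high. As the generator improves and its samples are scored closer to 1, the balance shifts the other way.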

GAN usage has grown steadily over time. They can be used to improve astronomical images and simulate gravitational lensing for dark-matter research. Video game developers use GANs to upscale low-resolution 2D textures in old video games, recreating them in 4K or higher resolutions via image training.

GANs can also generate realistic images and cartoon characters, create photographs of human faces, and render 3D objects.

Autoencoders: Compositional Pattern Producing Networks

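An autoencoder is a neural network designed for dimensionality reduction: it compresses its input into a smaller representation and learns to reconstruct the input from it. A Compositional Pattern Producing Network (CPPN) is a different kind of network that is sometimes grouped with autoencoders. Instead of compressing data, a CPPN composes a variety of activation functions to create patterns from its inputs, which are typically coordinates. The patterns created are not just geometric shapes but creative, organic-looking forms. Autoencoders can be used for purposes such as pharmaceutical discovery, popularity prediction, and image processing.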
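
As a rough sketch of how a CPPN produces a pattern, assume it takes an (x, y) coordinate and passes it through a composition of sine, Gaussian, and tanh functions. The particular composition in `cppn` below is written by hand and is purely hypothetical; in practice the structure and weights of a CPPN are usually evolved or learned.

```python
import math


def gaussian(z):
    return math.exp(-z * z)


def cppn(x, y):
    """Map a coordinate to an intensity in [0, 1] by composing simple functions."""
    r = math.sqrt(x * x + y * y)   # distance from the center gives radial symmetry
    stripes = math.sin(4.0 * x)    # a periodic function gives repetition
    blob = gaussian(2.0 * r)       # a Gaussian gives a soft central bump
    mixed = math.tanh(1.5 * stripes + 2.0 * blob - 0.5 * y)
    return (mixed + 1.0) / 2.0     # rescale tanh output from [-1, 1] to [0, 1]


def render(size=16):
    """Sample the CPPN on a size x size grid over [-1, 1] x [-1, 1]."""
    coords = [-1.0 + 2.0 * k / (size - 1) for k in range(size)]
    return [[cppn(x, y) for x in coords] for y in coords]


image = render()
# Crude text preview: denser characters for higher intensity.
shades = " .:-=+*#%@"
for row in image:
    print("".join(shades[min(int(v * len(shades)), len(shades) - 1)] for v in row))
```

Because the pattern is a function of continuous coordinates, the same network can be sampled at any resolution by changing `size`.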
