In a world of Artificial Intelligence, Classification and Prediction is one the most important aspects of Machine Learning and Naive Bayes is a simple but surprisingly powerful algorithm for predictive modelling, according to Machine Learning Industry Experts.

Concept Behind Naive Bayes

It assumes the features that go into the model is independent of each other. That is changing the value of one feature, does not directly influence or change the value of any of the other features used in the algorithm.

There is a significant advantage. Since it is a probabilistic model, the algorithm can be coded up easily and the predictions made real quick.

Because of this, it is easily scalable and is traditionally the algorithm of choice for real-world applications that are required to respond to user’s requests instantaneously.

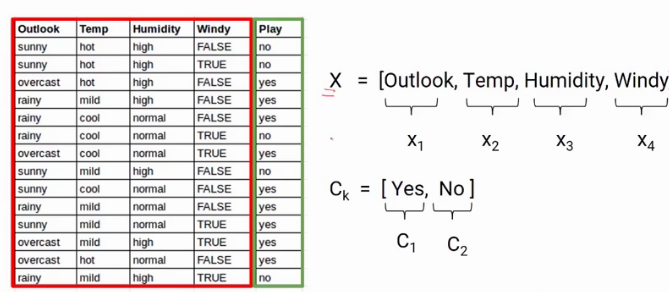

Let’s understand this algorithm works through an example. We have a dataset with some features Outlook, Temp, Humidity, and Windy, and the target is to predict whether a person will play or not. So, we are representing features as X like X1, X2, and so on. Similarly, the classes are represented as C1 and C2.

In this algorithm for every observation, we determine the probability that it belongs to class 1 or class 2. For example, we first find out the probability that the person will play given that Outlook is Sunny, Temperature is Hot, Humidity is High and it is not windy as shown below. Later, we will also calculate the probability that the person will not play given the same conditions.

What is Naive Bayes algorithm?

It uses the Bayes’ theorem with strong independence assumptions between the features to procure results. That means that the algorithm assumes that each input variable is independent. For example, if you use this algorithm for sentiment analysis, given the sentence ‘I like Harry Potter’, the algorithm will look at the individual words and not the entire sentence. In a sentence, words that stand next to each other influence the meaning of each other, and the position of words in a sentence is also important. However, phrases like ‘I like Harry Potter’ and ‘Potter I like Harry’ are the same for the algorithm.

It turns out that the algorithm can effectively solve many complex problems. For example, building a text classifier with Bayes is much easier than algorithms such as neural networks. The model works well even with insufficient or mislabeled data, so you don’t have to ‘feed’ it hundreds of thousands of examples before you can get something reasonable out of it.

It is called Naive because it assumes that each input variable is independent. This is unrealistic for real data; however, the technique is very effective on many complex problems. The thought behind Bayes classification is to try to classify the data by maximizing P(O | Ci) * P(Ci) using Bayes theorem of posterior probability (where O is the Object or tuple in a dataset and “i” is an index of the class).

Types of Naive Bayes

Let’s discuss different types of Bayes algorithm and which is used when.

Gaussian:

This type is used when variables are continuous in nature. It assumes that all the variables have a normal distribution. So if you have some variables which do not have this property, you might want to transform variables to the features having distribution normal.

Multinomial:

It is used when the features represent the frequency.

Suppose you have a text document and you extract all the unique words and create multiple features where each feature represents the count of the word in the document. In such a case, we have a frequency as a feature. In such a scenario, we use multinomial Naive Bayes.

It ignores the non-occurrence of the features. So, if you have frequency 0 then the probability of occurrence of that feature will be 0 hence multinomial naive Bayes ignores that feature. It works well with text classification problems.

Bernoulli:

This is used when features are binary. So, instead of using the frequency of the word, if you have discrete features in 1s and 0s that represent the presence or absence of a feature. In that case, the features will be binary and we will use Bernoulli Naive Bayes.

Also, this method will penalize the non-occurrence of a feature.

What is it used for?

- Real-time Prediction:

- This Algorithm is fast and always ready to learn hence best suited for real-time predictions.

- Multi-class Prediction:

- The probability of multi-classes of any target variable can be predicted using a Naive Bayes algorithm.

- Recommendation system:

- Naive Bayes classifier with the help of Collaborative Filtering builds a Recommendation System. This system uses data mining and machine learning techniques to filter the information which is not seen before and then predict whether a user would appreciate a given resource or not.

- Text Classification and Spam Filtering:

- Due to its better performance with multi-class problems and its independence rule, the algorithm performs better or has a higher success rate in text classification; therefore, it is used in Sentiment Analysis and Spam filtering.

Advantages and Disadvantages of Naive Bayes

- Advantages

- Easy to implement.

- Fast

- It requires less training data.

- It is highly scalable.

- It can make probabilistic predictions.

- Can handle both continuous and discrete data.

- Insensitive towards irrelevant features.

- It can work easily with missing values.

- Easy to update on the arrival of new data.

- Best suited for text classification problems.

- Disadvantages

- The strong assumption about the features to be independent is hardly true in real-life applications.

- Chances of loss of accuracy.

- If the category of any categorical variable is not seen in the training data set, then the model assigns a zero probability to that category, and then a prediction cannot be made.

Recommended for you:

MOST COMMENTED

Tutorial

Important Methods in Matplotlib

Machine Learning

Bias and Variance Tradeoff Machine Learning

Tutorial

Multiclass and Multilabel Classification

Machine Learning

Reinforcement Learning in Machine Learning

Deep Learning

Alexnet Architecture Code

Machine Learning

Machine Learning Models Explained