Regularization

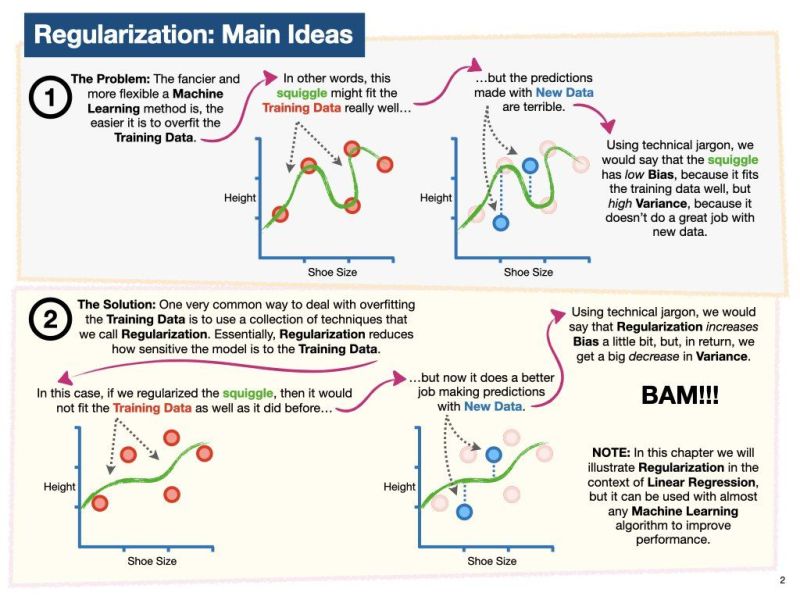

Regularization is one of the most important concepts in machine learning. It is a technique that prevents a model from overfitting by adding extra information to it, usually in the form of a penalty term in the cost function.

Sometimes a machine learning model performs well on the training data but poorly on the test data. This means the model has fit the noise in the training data and cannot predict the output well when it deals with unseen data; such a model is called overfitted. This problem can be dealt with using a regularization technique.

Regularization lets us keep all variables or features in the model while reducing the magnitude of their coefficients. Hence, it maintains the accuracy as well as the generalization of the model.

“In the regularization technique, we reduce the magnitude of the feature coefficients while keeping the same number of features.”
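As a rough illustration of this idea, the sketch below scores two weight vectors that make identical predictions on a toy dataset. It assumes a squared-error cost with a squared-weight penalty, which is one common choice; the function name `penalized_cost`, the parameter `lam`, and the data are made up for this example.

```python
def penalized_cost(X, y, weights, lam):
    """Sum of squared errors plus lam times the sum of squared weights."""
    sse = 0.0
    for row, target in zip(X, y):
        prediction = sum(w * value for w, value in zip(weights, row))
        sse += (target - prediction) ** 2
    penalty = lam * sum(w * w for w in weights)
    return sse + penalty


# Two identical features, so many weight vectors give the same predictions.
X = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
y = [2.0, 4.0, 6.0]

large_weights = [10.0, -8.0]  # predicts 2 * x, using large magnitudes
small_weights = [1.0, 1.0]    # predicts 2 * x, using small magnitudes

for lam in (0.0, 1.0):
    print(lam,
          penalized_cost(X, y, large_weights, lam),
          penalized_cost(X, y, small_weights, lam))
```

Without the penalty (`lam = 0`) the two candidates are indistinguishable, since both fit the data exactly. With `lam = 1` the small-magnitude weights cost far less, so the penalized cost prefers them while still using both features.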

Techniques of Regularization

There are mainly three types of regularization techniques, which are given below:

- Ridge Regression

- Lasso Regression

- Elastic Net

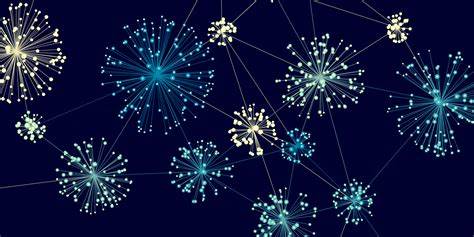

Ridge Regression

- It is also called L2 regularization.

- Ridge regression is one of the types of linear regression in which a small amount of bias is introduced so that we can get better long-term predictions.

- Ridge regression is a regularization technique, which is used to reduce the complexity of the model.

- In this technique, the cost function is altered by adding a penalty term to it. The amount of bias added to the model is called the ridge regression penalty. It is calculated by multiplying λ by the sum of the squared weights of the individual features.

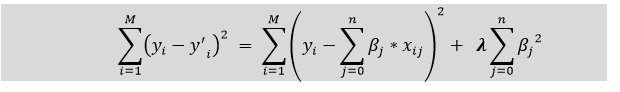

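- The equation for the cost function in ridge regression will be:

  $$\text{Cost} = \sum_{i=1}^{M} \left(y_i - \hat{y}_i\right)^2 + \lambda \sum_{j=1}^{n} \beta_j^2$$

  Here $\hat{y}_i = \beta_0 + \sum_{j=1}^{n} \beta_j x_{ij}$ is the prediction for sample $i$, $M$ is the number of samples, $n$ is the number of features, and $\lambda \ge 0$ controls the strength of the penalty.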

- In the above equation, the penalty term regularizes the coefficients of the model, and hence ridge regression reduces the magnitudes of the coefficients, which decreases the complexity of the model.

- As we can see from the above equation, as λ tends to zero, the equation becomes the cost function of the ordinary linear regression model. Hence, for very small values of λ, the model will resemble the linear regression model.

- Ordinary linear or polynomial regression gives unstable coefficient estimates if there is high collinearity between the independent variables; ridge regression can be used to solve such problems.

- It also helps when we have more parameters than samples.
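The points in the list above can be sketched with NumPy. This is a minimal sketch, not a production implementation: it assumes a model without an intercept, uses the standard closed-form minimizer of the squared-error cost with a squared-weight penalty, and runs on synthetic data. The names `ridge_fit` and `lam` are chosen for this example.

```python
import numpy as np


def ridge_fit(X, y, lam):
    """Minimize sum((y - X @ beta)**2) + lam * sum(beta**2) in closed form."""
    n_features = X.shape[1]
    # Normal equations with the penalty added to the diagonal.
    A = X.T @ X + lam * np.eye(n_features)
    return np.linalg.solve(A, X.T @ y)


rng = np.random.default_rng(0)
x1 = rng.normal(size=50)
x2 = x1 + 0.01 * rng.normal(size=50)  # nearly collinear with x1
x3 = rng.normal(size=50)
X = np.column_stack([x1, x2, x3])
y = 2.0 * x1 + 1.0 * x3 + 0.1 * rng.normal(size=50)

# lam = 0 is ordinary least squares; larger lam shrinks the coefficients.
for lam in (0.0, 1.0, 100.0):
    beta = ridge_fit(X, y, lam)
    print(f"lambda={lam:>6}: beta={np.round(beta, 3)}, "
          f"norm={np.linalg.norm(beta):.3f}")

# More parameters than samples: X.T @ X alone is singular, but adding
# lam * I to it makes the system solvable.
X_wide = rng.normal(size=(5, 10))
y_wide = rng.normal(size=5)
beta_wide = ridge_fit(X_wide, y_wide, 1.0)
print(np.round(beta_wide, 3))
```

With `lam = 0` the function reproduces the least-squares fit, and the length of the coefficient vector shrinks as `lam` grows. The last call shows that the penalized system still has a solution when there are 10 parameters and only 5 samples.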

Lasso Regression

- It is also called L1 regularization.

- Lasso regression is another regularization technique to reduce the complexity of the model.

- It stands for Least Absolute Shrinkage and Selection Operator.

- It is similar to ridge regression except that the penalty term contains the absolute values of the weights instead of the squares of the weights.

- Because it penalizes absolute values, it can shrink a slope exactly to 0, whereas ridge regression can only shrink it close to 0.

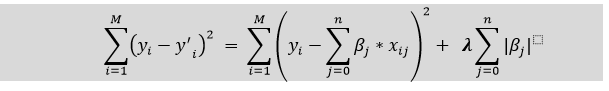

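- The equation for the cost function of Lasso regression will be:

  $$\text{Cost} = \sum_{i=1}^{M} \left(y_i - \hat{y}_i\right)^2 + \lambda \sum_{j=1}^{n} \left|\beta_j\right|$$

  Here $\hat{y}_i = \beta_0 + \sum_{j=1}^{n} \beta_j x_{ij}$ is the prediction for sample $i$, $M$ is the number of samples, $n$ is the number of features, and $\lambda \ge 0$ controls the strength of the penalty.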

- Hence, lasso regression can help us reduce overfitting in the model and also performs feature selection.
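The difference between shrinking a slope exactly to 0 and only close to 0 can be seen in the simplest possible case. The sketch below assumes a single feature and no intercept, where both penalized costs have a closed-form minimizer. The helper names (`soft_threshold`, `ridge_slope`, `lasso_slope`) and the toy data are made up for this example.

```python
def soft_threshold(rho, t):
    """Shrink rho toward zero by t; return exactly 0.0 when |rho| <= t."""
    if rho > t:
        return rho - t
    if rho < -t:
        return rho + t
    return 0.0


def ridge_slope(x, y, lam):
    """Minimizer of sum((y - b * x)**2) + lam * b**2 over b."""
    rho = sum(xi * yi for xi, yi in zip(x, y))
    z = sum(xi * xi for xi in x)
    return rho / (z + lam)


def lasso_slope(x, y, lam):
    """Minimizer of sum((y - b * x)**2) + lam * abs(b) over b."""
    rho = sum(xi * yi for xi, yi in zip(x, y))
    z = sum(xi * xi for xi in x)
    return soft_threshold(rho, lam / 2.0) / z


x = [1.0, 2.0, 2.0]
y = [1.0, 2.0, 2.0]  # the unpenalized least-squares slope is exactly 1

for lam in (0.0, 6.0, 18.0, 36.0):
    print(lam, ridge_slope(x, y, lam), lasso_slope(x, y, lam))
```

On this data the lasso slope becomes exactly 0 once `lam` reaches 18, while the ridge slope keeps getting smaller but stays positive for every finite `lam`. This is why lasso can act as a feature selector and ridge cannot.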

Elastic Net

Elastic Net is a technique that combines the penalty terms of ridge and lasso to regularize regression models. By combining both methods, it aims to address the shortcomings of each.

Elastic Net improves on lasso's limitations. For high-dimensional data with more features than samples, lasso selects at most as many variables as there are samples before it saturates, whereas the elastic net procedure can include more variables than that. Also, if the variables form highly correlated groups, lasso tends to choose one variable from each such group and ignore the rest entirely.

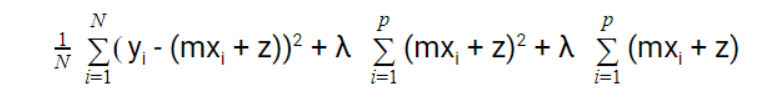

Elastic Net = Lasso Regression + Ridge Regression

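The equation for Elastic Net is given below:

$$\text{Cost} = \sum_{i=1}^{M} \left(y_i - \hat{y}_i\right)^2 + \lambda_1 \sum_{j=1}^{n} \left|\beta_j\right| + \lambda_2 \sum_{j=1}^{n} \beta_j^2$$

Here $\hat{y}_i = \beta_0 + \sum_{j=1}^{n} \beta_j x_{ij}$ is the prediction for sample $i$, $M$ is the number of samples, $n$ is the number of features, and $\lambda_1, \lambda_2 \ge 0$ set the strength of the lasso and ridge penalties respectively.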

Lasso regression can introduce a small bias into the model when the prediction depends too heavily on a particular variable. In these cases, Elastic Net has proved to be better because it combines the regularization of both lasso and ridge.

Its advantage is that it does not easily eliminate the coefficients of highly collinear variables.

Elastic Net uses both lasso and ridge regularization in order to remove the unnecessary coefficients but not the informative ones.
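The behaviour with collinear variables can be sketched with a small coordinate descent solver. This is only one way to fit such a model, under stated assumptions: no intercept, a cost of squared error plus `lam1` times the sum of absolute weights plus `lam2` times the sum of squared weights, and a fixed number of sweeps instead of a convergence check. The names `elastic_net_cd`, `lam1`, `lam2`, and `n_sweeps` are hypothetical, and the data is an extreme toy case with two identical features.

```python
def soft_threshold(rho, t):
    """Shrink rho toward zero by t; return exactly 0.0 when |rho| <= t."""
    if rho > t:
        return rho - t
    if rho < -t:
        return rho + t
    return 0.0


def elastic_net_cd(X, y, lam1, lam2, n_sweeps=200):
    """Coordinate descent for
        sum_i (y[i] - sum_j X[i][j] * beta[j])**2
        + lam1 * sum_j abs(beta[j]) + lam2 * sum_j beta[j]**2.
    Setting lam2 = 0 gives the lasso; setting lam1 = 0 gives ridge.
    """
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    for _ in range(n_sweeps):
        for j in range(p):
            # Residual with feature j's own contribution left out.
            partial = [
                y[i] - sum(X[i][k] * beta[k] for k in range(p) if k != j)
                for i in range(n)
            ]
            rho = sum(X[i][j] * partial[i] for i in range(n))
            z = sum(X[i][j] ** 2 for i in range(n))
            # One-dimensional minimizer of the cost in beta[j].
            beta[j] = soft_threshold(rho, lam1 / 2.0) / (z + lam2)
    return beta


# Two identical (perfectly collinear) features; y is twice the feature.
X = [[-1.0, -1.0], [0.0, 0.0], [1.0, 1.0]]
y = [-2.0, 0.0, 2.0]

lasso_beta = elastic_net_cd(X, y, lam1=1.0, lam2=0.0)
enet_beta = elastic_net_cd(X, y, lam1=1.0, lam2=1.0)
print("lasso      :", lasso_beta)
print("elastic net:", enet_beta)
```

With the lasso penalty alone, this solver puts all of the weight on the first feature and leaves the second at exactly 0. The lasso cost has many equally good solutions here, and starting from zero the solver lands on that one. Adding the ridge term makes the solution unique and splits the weight evenly, at about 0.7 per feature, so neither collinear feature is eliminated.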

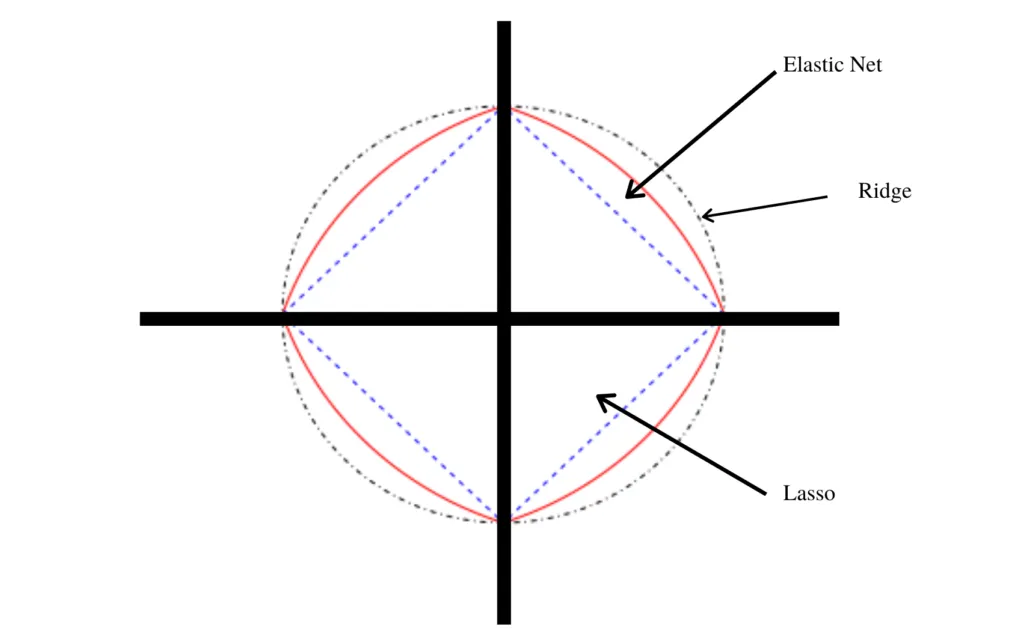

Difference between Ridge, Lasso and Elastic Net

- A small bias can disturb the prediction when it depends too heavily on one variable. Therefore, Elastic Net is better than ridge and lasso at handling collinearity.

- Also, when it comes to complexity, Elastic Net performs better than ridge and lasso regression, where the number of variables is not significantly reduced. This inability to reduce the number of variables lowers model accuracy.

- Ridge and Elastic Net could be considered better than lasso regression, as lasso predictions are often not as accurate as those of ridge and Elastic Net. Lasso regression loses accuracy when the coefficients of relevant predictors are set to zero.

In general, to avoid overfitting, regularized models are preferable to a plain linear regression model. In most scenarios, ridge works well. But when you are not certain whether to use lasso or elastic net, elastic net is the better choice, because lasso tends to keep only one feature from a group of strongly correlated features and drop the rest.
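In practice, one way to compare these models is to fit each of them on the same split and look at held-out performance. The sketch below assumes scikit-learn is installed and uses synthetic data from `make_regression`; the dataset sizes and the `alpha` and `l1_ratio` values are arbitrary choices for illustration, not recommendations. In scikit-learn, `alpha` plays the role of λ (up to a scaling of the cost), and `l1_ratio` sets the mix between the lasso and ridge penalties.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import ElasticNet, Lasso, LinearRegression, Ridge
from sklearn.model_selection import train_test_split

# Synthetic data: 10 features, only 4 of which carry signal (an assumption
# made for this illustration).
X, y = make_regression(n_samples=200, n_features=10, n_informative=4,
                       noise=10.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0)

models = {
    "linear": LinearRegression(),
    "ridge": Ridge(alpha=1.0),
    "lasso": Lasso(alpha=1.0),
    "elastic net": ElasticNet(alpha=1.0, l1_ratio=0.5),
}

results = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    r2 = model.score(X_test, y_test)           # R^2 on held-out data
    n_zero = int((model.coef_ == 0).sum())     # coefficients set exactly to 0
    results[name] = (r2, n_zero)
    print(f"{name:12s} test R^2={r2:.3f}  zero coefficients={n_zero}")
```

Which model scores best depends on the data and on the penalty strength, so the values above would normally be tuned, for example by cross-validation, before drawing any conclusion.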

Recommended for you:

MOST COMMENTED

Tutorial

Important Methods in Matplotlib

Machine Learning

Bias and Variance Tradeoff Machine Learning

Tutorial

Multiclass and Multilabel Classification

Machine Learning

Reinforcement Learning in Machine Learning

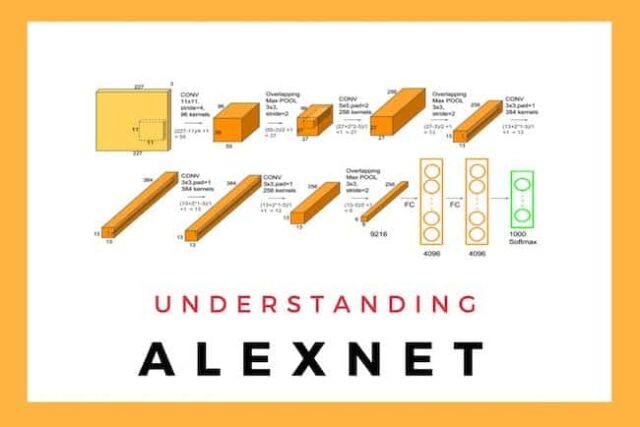

Deep Learning

Alexnet Architecture Code

Machine Learning

Machine Learning Models Explained