Convolutional Neural Network

Convolutional Neural Networks (CNNs) are at their peak in the 21st century, and some estimates put their further growth at up to 10% in the coming years. The reason behind that increase is data. According to some sources, in 2020 almost 90% of the data we used was unlabelled, and of that 90%, almost 40–50% was in the form of images. Whether you upload an image to your social media or publish a post on Twitter, image data is everywhere. We need intelligent algorithms that can extract meaning from that data.

CNNs are mainly used for image classification, segmentation, and other related tasks. A CNN can predict the objects inside an image just by looking at it, as we humans do.

Introduction to VGGNet

VGG stands for the Visual Geometry Group, which belongs to the Department of Engineering Science at the University of Oxford. The group released a series of convolutional network models, from VGG16 to VGG19, which can be applied to face recognition and image classification. The original purpose of VGG's research on network depth was to understand how the depth of a convolutional network affects the accuracy of large-scale image classification and recognition. In VGG16 (a very deep 16-layer CNN), a small 3×3 convolution kernel is used in all layers, in order to deepen the network while avoiding too many parameters.

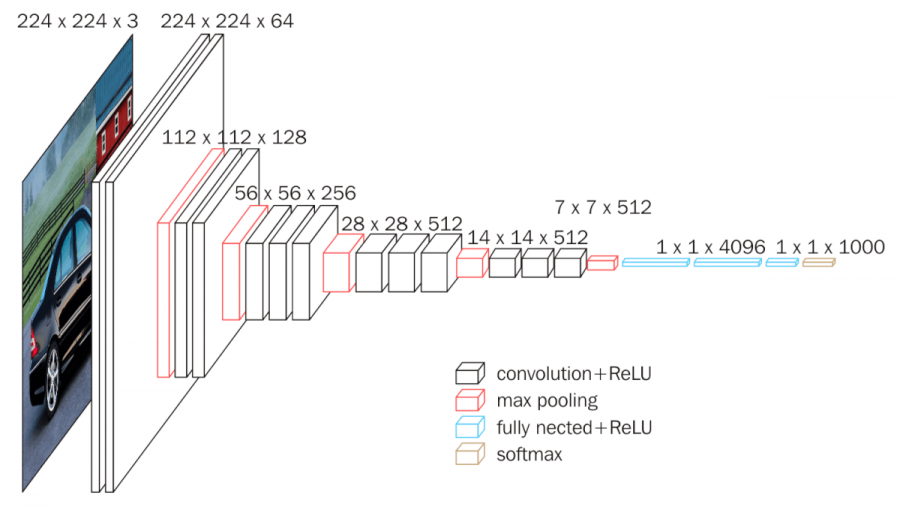

VGG Architecture

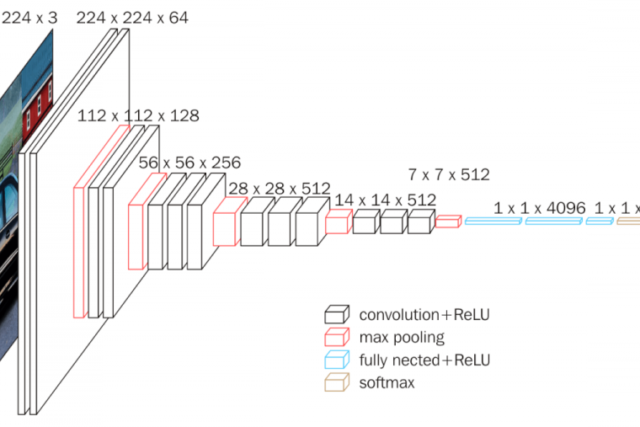

The input to a VGG-based ConvNet is a 224×224 RGB image. A preprocessing step takes the RGB image, with pixel values in the range 0–255, and subtracts the mean image values, which are calculated over the entire ImageNet training set.
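The mean-subtraction step can be sketched in a few lines of plain Python. This is a simplified illustration, not the original preprocessing code: it subtracts one mean per colour channel rather than a full mean image, and the constants in `IMAGENET_MEAN_RGB` are the values commonly quoted for the released VGG models, which is an assumption not taken from the text above. The function name `subtract_mean` is hypothetical.

```python
# Per-channel ImageNet means in R, G, B order. These are the values commonly
# quoted for the released VGG models; they are an assumption, not from the text.
IMAGENET_MEAN_RGB = (123.68, 116.779, 103.939)


def subtract_mean(image, mean_rgb=IMAGENET_MEAN_RGB):
    """Subtract a per-channel mean from an image.

    `image` is a nested list shaped [height][width][3] with values in 0-255.
    Returns a new nested list of floats with the same shape.
    """
    return [
        [[pixel[c] - mean_rgb[c] for c in range(3)] for pixel in row]
        for row in image
    ]


# A tiny 1x2 "image": one black pixel and one white pixel.
tiny = [[[0, 0, 0], [255, 255, 255]]]
centered = subtract_mean(tiny)
print(centered[0][0])  # the black pixel becomes negative in every channel
```

After this step the pixel values are roughly centred around zero, which is the form the weight layers expect.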

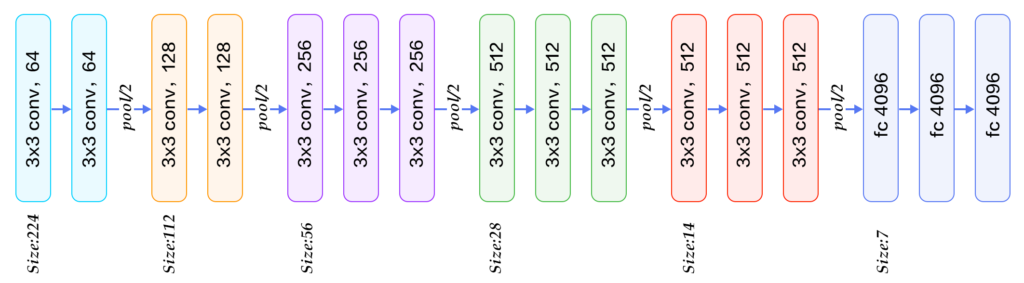

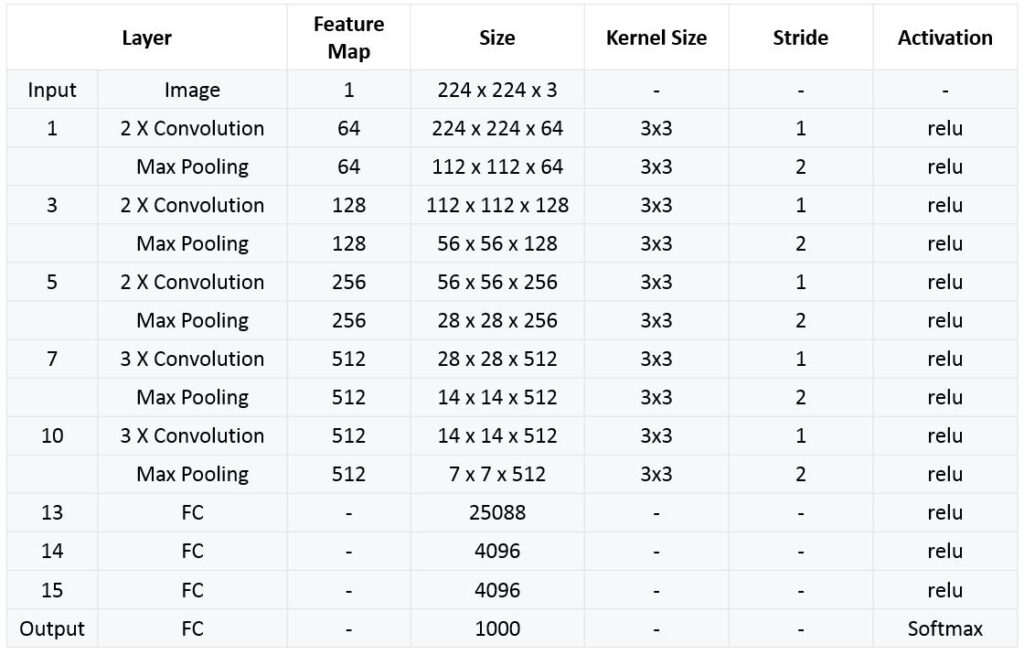

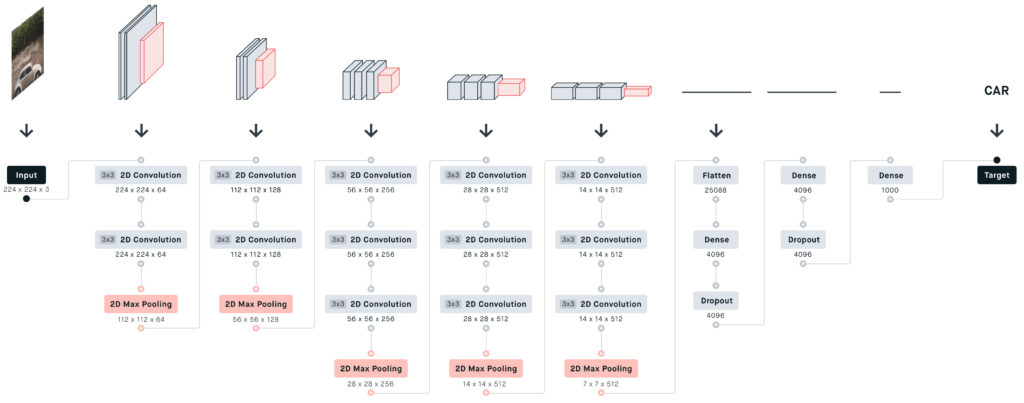

After preprocessing, the input images are passed through a stack of convolutional (weight) layers. The VGG16 architecture has a total of 13 convolutional layers and 3 fully connected layers. VGG uses small 3×3 filters with more depth instead of large filters. A stack of three 3×3 convolutional layers ends up with the same effective receptive field as a single 7×7 convolutional layer.
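The receptive-field claim can be checked with a short calculation. The sketch below uses the standard recurrence for stacked convolutions (each layer widens the field by `(kernel_size - 1)` times the current spacing between units); the helper name `receptive_field` is hypothetical.

```python
def receptive_field(layers):
    """Receptive field of a conv stack given (kernel_size, stride) pairs."""
    field = 1  # before any layer, one unit sees exactly one input pixel
    jump = 1   # spacing, in input pixels, between neighbouring units
    for kernel_size, stride in layers:
        field += (kernel_size - 1) * jump
        jump *= stride
    return field


three_3x3 = receptive_field([(3, 1)] * 3)  # three stacked 3x3 convs, stride 1
one_7x7 = receptive_field([(7, 1)])        # a single 7x7 conv, stride 1
print(three_3x3, one_7x7)  # prints: 7 7
```

Two stacked 3×3 layers give a 5×5 field by the same rule, so each extra 3×3 layer adds two pixels to each side of the window.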

Another variant of VGGNet has 19 weight layers: 16 convolutional layers, 3 fully connected layers, and the same 5 pooling layers. Both variants have two fully connected layers with 4096 channels each, followed by another fully connected layer with 1000 channels to predict 1000 labels. The last fully connected layer is followed by a softmax layer for classification.

VGG16 contains 16 weight layers and VGG19 contains 19. All models in the VGG series are exactly the same in the last three fully connected layers. The overall structure consists of 5 sets of convolutional layers, each followed by a max-pooling layer. The difference between the variants is that the deeper models cascade more convolutional layers within the five sets.
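The layer counts above can be reproduced from a compact description of each network. In this sketch the lists follow configurations D (VGG16) and E (VGG19) of the VGG paper; the `"M"` notation, the `summarize` helper, and the assumption that each 3×3 convolution is padded so that only pooling changes the spatial size are illustrative choices, not details from the text.

```python
# Integers are the output channels of a 3x3 convolution; "M" is a 2x2 max pool.
VGG16 = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
         512, 512, 512, "M", 512, 512, 512, "M"]
VGG19 = [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
         512, 512, 512, 512, "M", 512, 512, 512, 512, "M"]
NUM_FC_LAYERS = 3  # 4096, 4096, and 1000 channels in both variants


def summarize(config, input_size=224):
    """Count layers and track the feature-map size through the conv stack."""
    conv_layers = sum(1 for item in config if item != "M")
    pool_layers = config.count("M")
    size = input_size
    for item in config:
        if item == "M":
            size //= 2  # each 2x2 pool with stride 2 halves height and width
    return {
        "conv_layers": conv_layers,
        "pool_layers": pool_layers,
        "weight_layers": conv_layers + NUM_FC_LAYERS,
        "final_feature_map": size,
    }


vgg16_summary = summarize(VGG16)
vgg19_summary = summarize(VGG19)
print(vgg16_summary)
print(vgg19_summary)
```

Both variants reduce a 224×224 input to a 7×7 feature map before the fully connected layers; only the number of convolutions inside the last three sets differs.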

Each convolutional layer in AlexNet contains only one convolution, and the early layers use large kernels (11×11 in the first layer and 5×5 in the second). In VGGNet, each convolutional set contains 2 to 4 convolution operations. The convolution kernel is 3×3 with a stride of 1, and the pooling kernel is 2×2 with a stride of 2. The most obvious improvement of VGGNet is to reduce the size of the convolution kernel and increase the number of convolutional layers.

Using several convolutional layers with small kernels instead of one convolutional layer with a large kernel reduces the number of parameters. The authors also argue that it adds more non-linear mappings, which increases the network's fitting and expressive capacity.
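The parameter saving is easy to verify with arithmetic. The sketch below compares three stacked 3×3 layers against one 7×7 layer, assuming the same number of input and output channels in every layer and ignoring biases; the channel count of 256 and the helper name `conv_weights` are hypothetical choices for illustration.

```python
def conv_weights(kernel_size, channels, num_layers=1):
    """Weights (no biases) in a conv stack with equal in/out channels."""
    return num_layers * kernel_size * kernel_size * channels * channels


channels = 256  # hypothetical channel count, chosen only for illustration
stacked = conv_weights(3, channels, num_layers=3)  # 3 * 9 * C^2 = 27 * C^2
single = conv_weights(7, channels)                 # 49 * C^2
saving = 1 - stacked / single
print(stacked, single, round(saving, 3))
```

For any channel count the ratio is 27 to 49, so the stacked design needs roughly 45% fewer weights while also applying three non-linearities instead of one.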

Key Features of VGG16

- It is also called the OxfordNet model.

- The number 16 refers to its total of 16 layers that have weights.

- Its feature extractor uses only convolutional and pooling layers, followed by three fully connected layers.

- It always uses a 3×3 kernel for convolution.

- Max pooling uses a 2×2 window.

- It has a total of about 138 million parameters.

- It was trained on ImageNet data.

- It has one more version, VGG19, with a total of 19 layers with weights.
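The figure of about 138 million parameters can be reproduced by counting weights and biases layer by layer. This is a sketch under stated assumptions: the configuration list follows configuration D of the VGG paper, every convolution is assumed to be padded so that only pooling shrinks the feature map, and the helper name `count_parameters` is hypothetical.

```python
# Integers are output channels of a 3x3 convolution; "M" is a 2x2 max pool.
VGG16_CONV = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
              512, 512, 512, "M", 512, 512, 512, "M"]
FC_SIZES = [4096, 4096, 1000]


def count_parameters(conv_config, fc_sizes, in_channels=3, input_size=224):
    """Count weights and biases of the conv stack and the FC layers."""
    total = 0
    channels = in_channels
    size = input_size
    for item in conv_config:
        if item == "M":
            size //= 2  # pooling has no parameters, it only halves the size
        else:
            total += 3 * 3 * channels * item + item  # weights + biases
            channels = item
    features = channels * size * size  # flattened input to the first FC layer
    for units in fc_sizes:
        total += features * units + units  # weights + biases
        features = units
    return total


total = count_parameters(VGG16_CONV, FC_SIZES)
print(f"{total:,}")  # prints: 138,357,544
```

Most of the total comes from the first fully connected layer, which alone holds over 100 million weights because it connects the 7×7×512 feature map to 4096 units.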
