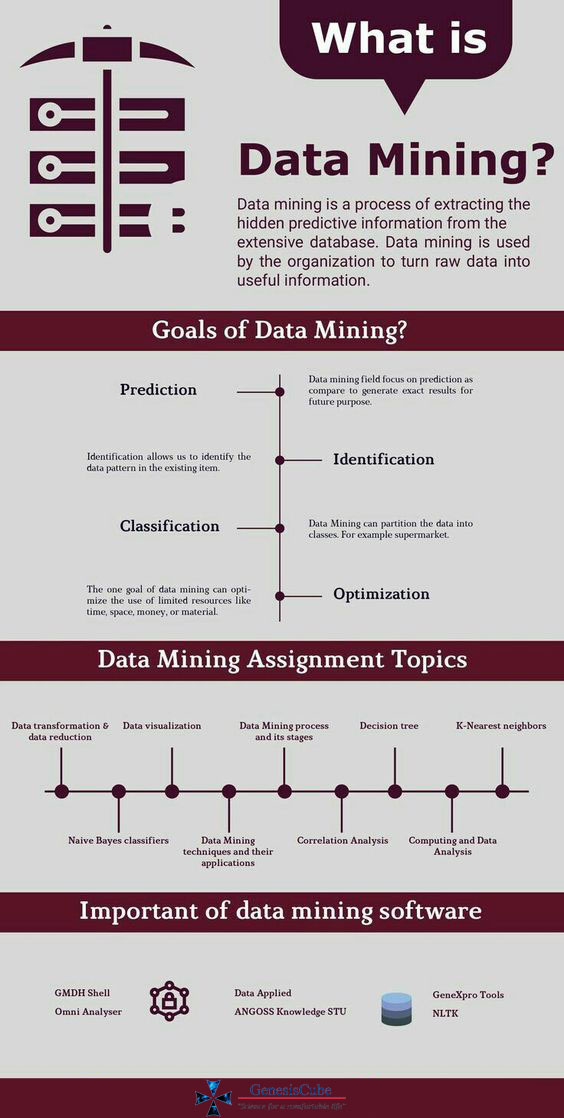

Data mining, also known as knowledge discovery in data (KDD), is the process of analyzing large amounts of data to identify patterns, anomalies, and correlations. Organizations use this type of analysis to help predict the outcome of business decisions, such as moves to increase revenue or reduce risk.

As businesses rely more on digital processes, they accumulate a wealth of data. Companies today can track everything from sales transactions to internal processes. Data mining improves organizational decision-making through insightful analysis of that data. The techniques that underpin these analyses serve two main purposes: they either describe the target dataset or predict outcomes through the use of machine learning algorithms. These methods are used to organize and filter data, surfacing the most interesting information.

As a branch of data science, data mining sits at the intersection of statistics, artificial intelligence, and machine learning.

History

The statistical foundations of data mining were laid by Bayes’ Theorem in 1763 and the discovery of regression analysis in 1805. The concept of data mining itself originated in the 1990s as a result of advances in database and data warehouse technologies. Previously, only NASA and similar organizations were able to analyze big data. The increased processing power and speed of today’s computer systems now allow industries of all kinds to uncover correlations and patterns in even vast quantities of data.

Data Mining vs. Data Analytics

Data mining is predominantly seen as a specific aspect of data analytics that automates the analysis of large data sets to discover information that otherwise couldn’t be detected. That information can then be used in the data science process and in other analytics applications.

Today, data mining applications are often served by data lakes that store both historical and streaming data and are built on big data platforms such as Hadoop, NoSQL databases, or cloud object storage services.

Data Mining vs. Machine Learning

Data mining and machine learning are both useful for detecting patterns in large data sets, but they differ in how much human involvement they require.

Data mining is the process of finding patterns in data. It helps to answer questions we didn’t know to ask by proactively identifying non-intuitive data patterns through algorithms. However, the interpretation of these insights and their application to business decisions still require human involvement.

With machine learning, computers learn how to determine probabilities and make predictions based on their data analysis. While machine learning sometimes uses data mining as part of its process, it doesn’t require frequent, ongoing human involvement.

Importance

Data mining is a crucial component of successful analytics initiatives in organizations. The information it generates can be used in business intelligence and advanced analytics applications that involve analysis of historical data, as well as in real-time analytics applications.

The primary benefit of data mining is its power to identify patterns and correlations in large volumes of data from multiple sources. It can also look for correlations with external factors. While correlation does not always indicate causation, these trends can be valuable indicators to guide product, channel, and production decisions. The same analysis benefits other parts of the business, from product design to operational efficiency and service delivery.

What’s more, it can act as a mechanism for “thinking outside the box”, because it surfaces patterns that analysts would not have thought to look for.

Use cases

- Companies that design, make, or distribute physical products can pinpoint opportunities to better target their products by analyzing purchasing patterns coupled with economic and demographic data.

- The speed with which data mining can discern patterns and devise projections helps companies better manage their product stock and operate more efficiently.

- Manufacturers can track quality trends, repair data, production rates, and product performance data from the field to identify production concerns. They can also recognize possible process upgrades that would improve quality, save time and cost, and improve product performance.

- Businesses generate an enormous amount of data through loyalty programs. Data mining allows retailers to build and enhance customer relationships through that data. For example, by clustering customers according to basket totals and shopping frequency, retailers can offer each group targeted discounts, giving customers an incentive to shop.

- Data mining helps businesses understand consumer behaviors, track contact information and leads, and engage more customers in their marketing databases.
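
The loyalty-program use case above groups customers by basket total and shopping frequency. As a rough illustration only, here is a minimal k-means sketch in plain Python. The customer figures, the `kmeans` helper, and the choice of starting centroids are all assumptions made for this example; a real project would normally scale the features first and rely on an established library.

```python
import math

def kmeans(points, centroids, iterations=10):
    """Plain k-means: assign points to the nearest centroid, then recompute centroids."""
    centroids = [tuple(c) for c in centroids]
    assignments = []
    for _ in range(iterations):
        # Assignment step: index of the closest centroid for each point
        assignments = [
            min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its assigned points
        new_centroids = []
        for i, old in enumerate(centroids):
            members = [p for p, a in zip(points, assignments) if a == i]
            if members:
                new_centroids.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centroids.append(old)  # keep an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # centroids stopped moving, so the clustering has converged
        centroids = new_centroids
    return assignments, centroids

# Hypothetical loyalty data: (average basket total, store visits per month)
customers = [(20.0, 8), (25.0, 9), (22.0, 7), (110.0, 1), (120.0, 2), (105.0, 1)]

# Assumption: start from one small-basket and one large-basket customer
labels, centers = kmeans(customers, centroids=[customers[0], customers[3]])
print(labels)
```

In this toy data the frequent small-basket shoppers and the occasional large-basket shoppers end up in separate groups, which is the kind of segmentation a retailer could then target with different offers.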

Data Mining Techniques

Data mining works by using various techniques to turn large volumes of data into useful information. Here are some of the most common ones:

- Clustering

- This approach is aimed at grouping data by similarities. For example, when you mine your customer sales information combined with external consumer credit and demographic data, you may discover that your most profitable customers are from midsize cities.

- Regression

- One of the mathematical techniques available, regression analysis predicts a numeric value by projecting historical patterns into the future. Various other pattern detection and tracking algorithms provide flexible tools to help users better understand the data and the behavior it represents.

- Association

- Association is a rule-based method for finding relationships between variables in a given dataset. These methods are frequently used for market basket analysis, allowing companies to better understand relationships between different products and consumption habits of customers. That enables businesses to develop better cross-selling strategies and recommendation engines.

- Neural Networks

- Primarily leveraged for deep learning algorithms, neural networks process training data by mimicking the interconnectivity of the human brain through layers of nodes.

- Decision tree

- This technique uses classification or regression methods to classify or predict potential outcomes based on a set of decisions. As the name suggests, it uses a tree-like visualization to represent the potential outcomes of these decisions.

- K-Nearest Neighbor

- This is a non-parametric algorithm that classifies data points based on their proximity and association to other available data. K-Nearest Neighbor assumes that similar data points can be found near each other. As a result, it calculates the distance between data points, usually the Euclidean distance, and then assigns the most frequent category among the nearest neighbors (or, for numeric targets, their average).
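
To make the K-Nearest Neighbor description more concrete, here is a small sketch in plain Python. The `knn_classify` function, the training points, and the labels are hypothetical and exist only for this illustration; it covers the classification case (majority vote), not the averaging variant.

```python
import math
from collections import Counter

def knn_classify(query, labeled_points, k=3):
    """Return the most common label among the k training points closest to query."""
    # Sort training points by Euclidean distance to the query and keep the k nearest
    nearest = sorted(labeled_points, key=lambda item: math.dist(query, item[0]))[:k]
    # Majority vote over the labels of those neighbors
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical training data: ((feature_1, feature_2), label)
training = [
    ((1.0, 1.0), "low"),
    ((1.5, 2.0), "low"),
    ((2.0, 1.5), "low"),
    ((6.0, 6.5), "high"),
    ((7.0, 6.0), "high"),
    ((6.5, 7.0), "high"),
]

print(knn_classify((1.8, 1.2), training))  # nearest neighbors are all "low"
print(knn_classify((6.2, 6.1), training))  # nearest neighbors are all "high"
```

The value of `k` is a tuning choice: small values follow local noise more closely, while larger values smooth the decision at the cost of blurring boundaries between categories.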

These techniques are applied in a largely automated way, depending on how the question is posed.
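
As an illustration of the association technique used in market basket analysis, the sketch below computes two common rule metrics, support and confidence, over a handful of made-up baskets. The function names and the basket contents are assumptions for this example, not part of any particular tool.

```python
def support(transactions, items):
    """Fraction of transactions that contain every item in `items`."""
    items = set(items)
    return sum(items <= t for t in transactions) / len(transactions)

def confidence(transactions, antecedent, consequent):
    """Estimate of P(consequent | antecedent) for the rule antecedent -> consequent.

    Assumes the antecedent appears in at least one transaction.
    """
    both = support(transactions, set(antecedent) | set(consequent))
    return both / support(transactions, antecedent)

# Hypothetical shopping baskets, one set of products per transaction
baskets = [
    {"bread", "butter"},
    {"bread", "butter", "milk"},
    {"bread", "milk"},
    {"milk", "eggs"},
    {"bread", "butter", "eggs"},
]

# Rule under test: customers who buy bread also buy butter
rule_support = support(baskets, {"bread", "butter"})
rule_confidence = confidence(baskets, {"bread"}, {"butter"})
print(f"support={rule_support:.2f}, confidence={rule_confidence:.2f}")
```

Here bread and butter appear together in three of the five baskets, and three of the four baskets containing bread also contain butter. Rules with high support and confidence are the usual candidates for cross-selling and recommendations.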

How does Data Mining work?

In a nutshell, the process can be broken down into four basic steps:

- Problem understanding

- The business decision-maker needs a general understanding of the domain they will be working in.

- Data gathering

- Link your internal systems and databases through their data models and various relational tools, or gather the data together into a data warehouse. This includes any data from external sources that are part of your operations, like field sales or service, IoT, or social media. Seek out and acquire the rights to external data, including demographics, economic data, and market intelligence. Bring it into your data warehouse and link it to your data mining environment.

- Data preparation

- This part of the process is sometimes called data wrangling. Some of the data may need cleaning to remove duplication, inconsistencies, incomplete records, or outdated formats. Data preparation may be an ongoing task as new projects or data from new fields of inquiry become of interest.

- User training

- Provide formal training to your data miners as well as some supervised practice as they start to get familiar with these powerful tools. Continuing education is also a good idea once they have mastered the basics and can move on to more advanced techniques.
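
The data preparation step described above (removing duplicates, incomplete records, and outdated formats) can be sketched in a few lines of Python. The record layout, the field names, and the assumption that older records use a DD/MM/YYYY date format are all hypothetical choices for this example.

```python
from datetime import datetime

REQUIRED_FIELDS = ("customer_id", "date", "amount")  # assumed record layout

def prepare(records):
    """Drop incomplete and duplicate records and normalize date formats."""
    cleaned, seen = [], set()
    for rec in records:
        # Skip incomplete records (any required field missing or empty)
        if any(rec.get(field) in (None, "") for field in REQUIRED_FIELDS):
            continue
        # Normalize the assumed older DD/MM/YYYY format to ISO 8601 (YYYY-MM-DD)
        date = rec["date"]
        if "/" in date:
            date = datetime.strptime(date, "%d/%m/%Y").date().isoformat()
        key = (rec["customer_id"], date, float(rec["amount"]))
        if key in seen:  # duplicate once formats have been normalized
            continue
        seen.add(key)
        cleaned.append({"customer_id": key[0], "date": key[1], "amount": key[2]})
    return cleaned

raw = [
    {"customer_id": "C1", "date": "2023-01-05", "amount": "19.99"},
    {"customer_id": "C1", "date": "05/01/2023", "amount": "19.99"},  # same sale, old format
    {"customer_id": "C2", "date": "2023-01-06", "amount": ""},       # incomplete record
    {"customer_id": "C3", "date": "2023-01-07", "amount": "42.50"},
]

clean = prepare(raw)
print(clean)
```

Normalizing formats before checking for duplicates matters here: the first two records only turn out to be the same sale once their dates are written the same way.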

Challenges

- Big Data

- Data is being generated at a rapidly accelerating pace. Therefore, modern data mining tools are required to extract meaning from Big Data, given the high volume, high velocity, and wide variety of data structures as well as the increasing volume of unstructured data.

- User competency

- Although highly technical, analysis tools are now packaged with excellent user experience design, so virtually anyone can use them with minimal training. However, to fully gain the benefits, users must understand the data available and the business context of the information they are seeking. They must also know how the tools work and what they can do. It is a learning process, and users need to put some effort into developing this new skill set.

- Data quality and availability

- With masses of new data, there are also masses of incomplete, incorrect, misleading, fraudulent, and damaged data. The tools can help sort this all out, but the users must be continually aware of the source of the data and its reliability.
